Category: coreboot

OSFC 2021 – Going Full Open-Source

OSFC 2020 was a blast - we had great talks and discussions, some virtual beers and a lot of fun - and OSFC 2021 is just around the corner!

As remote conferences are new to us (and probably to everyone else as well), we gathered feedback from you: how did you like the conference, and which bits can we improve to make the experience better next time? Of course, everyone here at the OSFC hoped that we could hold the conference in person again - sadly that has to be postponed to next year. So here we are again - going full virtual!

The overall feedback from you was good - however, there was some feedback on the tools used for the OSFC that we would like to share. Our main tool for running the conference, including the ticketing system, was hopin.com - even though we liked the overall experience, we also noticed some minor problems around the platform:

- The billing and ticketing system was quite simple. We were unable to produce invoices from bought tickets which resulted in us generating invoices manually for each attendee.

- We got feedback from various people with different operating systems and browser setups that the stream was not working for them. We tried to resolve as many cases as possible - however, there was no clear pattern of which browser failed on which operating system.

- Sometimes the system was rather slow - and the chat was a bit complicated to handle.

- One big feedback point was that the system was not open - neither direct access to the stream nor the platform itself was open-source. Especially for an open-source conference, that's something we gave some thought to.

With this year's OSFC going virtual again, we put some thought into the technology we're planning to use throughout the event. Last time, we were in a rush - flipping everything over from physical to virtual. This year, we took our time, looked into various solutions and came up with a plan.

We hope you like the new approach we are taking with the OSFC, and we try to stick to the open-source spirit as closely as possible. Let's make sure that we all have a great OSFC experience! We are looking forward to building a virtual space for you again - and are looking for great talks and discussions around open-source firmware!

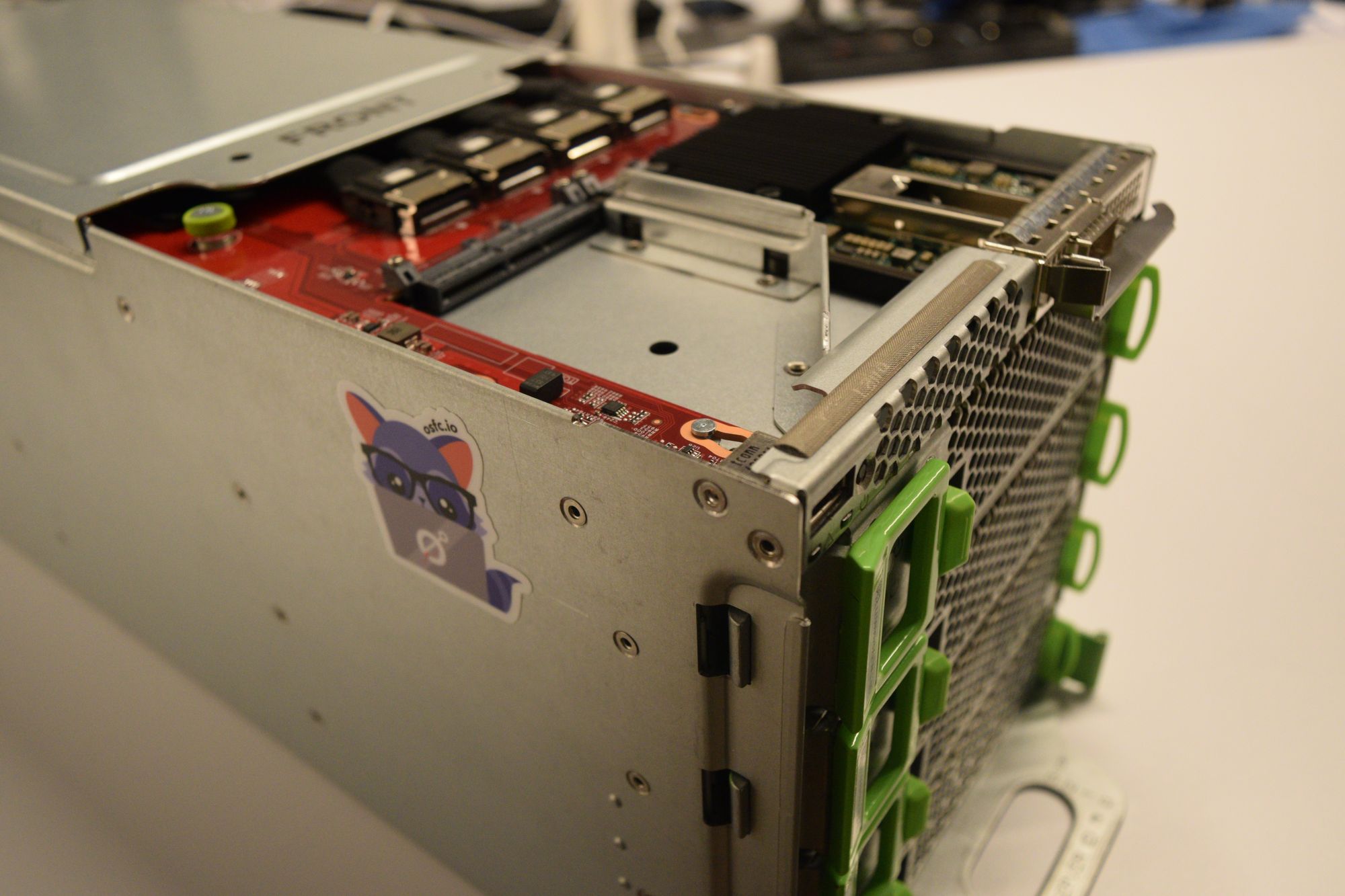

Coreboot on the ASRock E3C246D4I

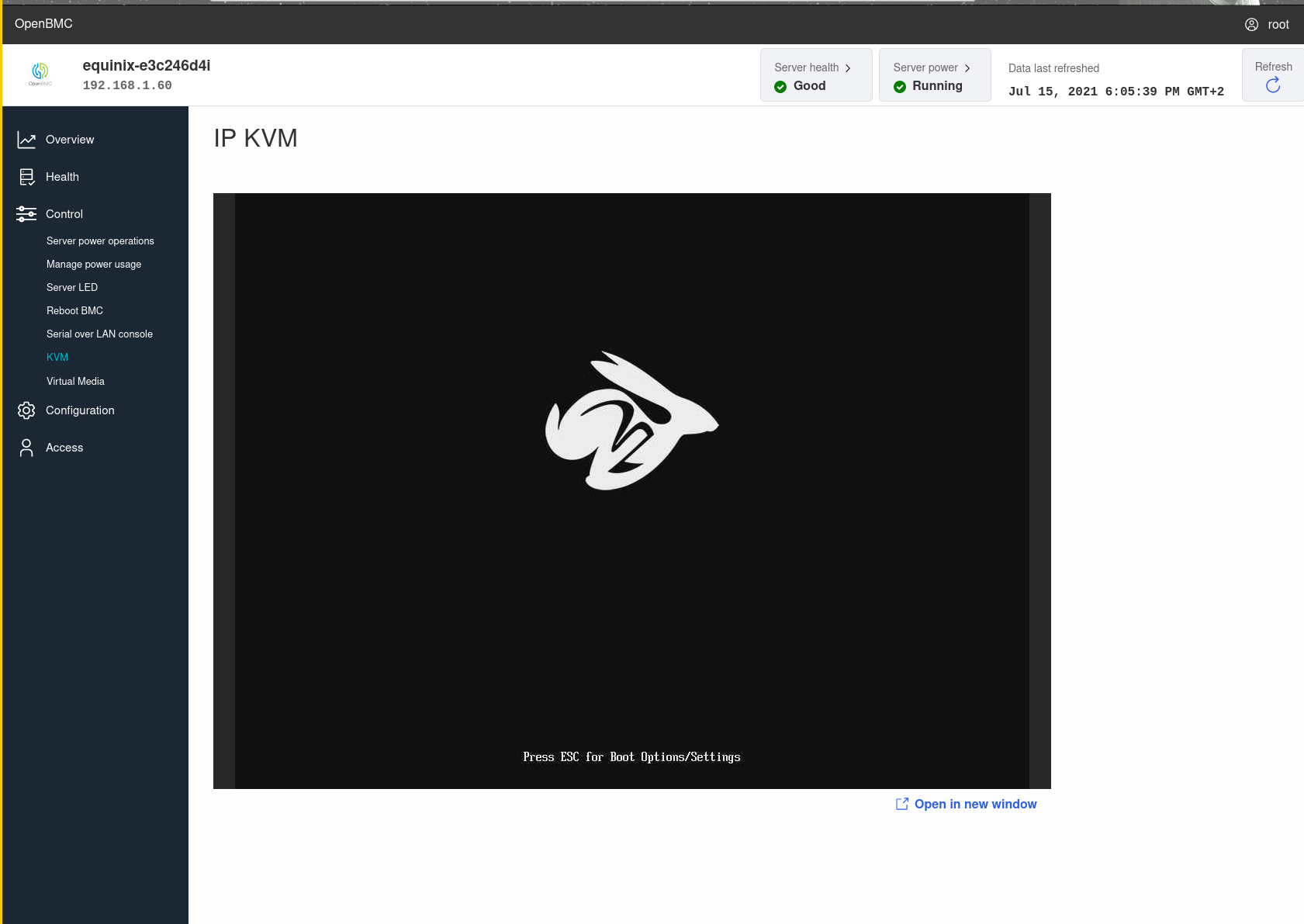

A new toy to play with OpenBMC

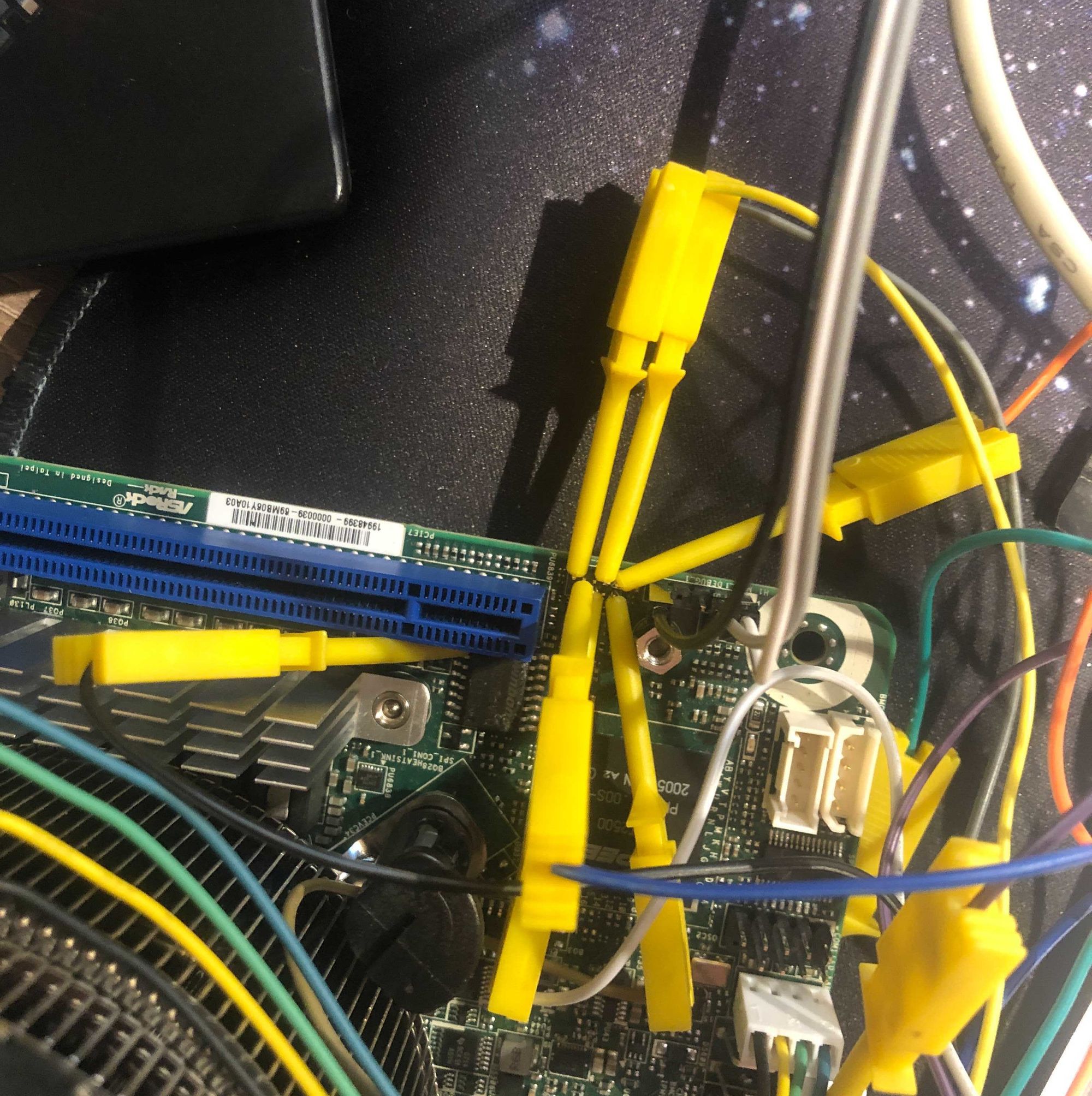

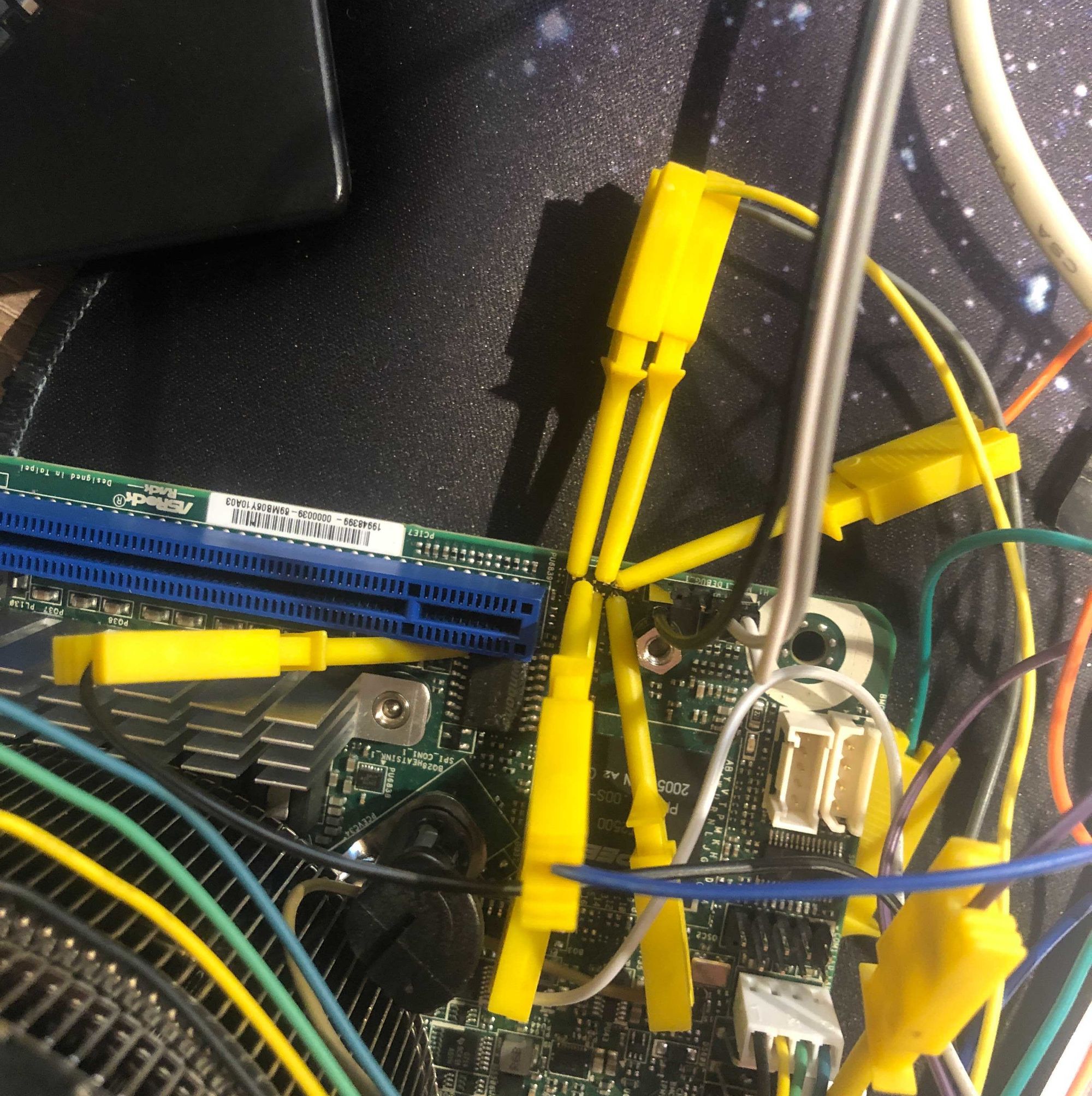

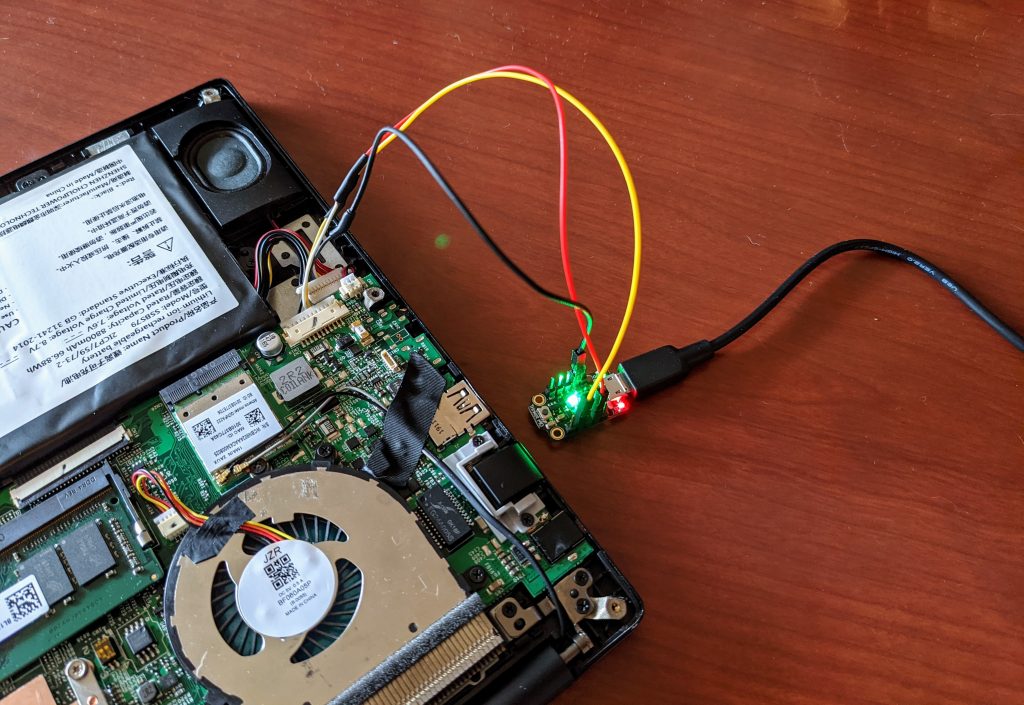

I wanted to play around with OpenBMC on a physical board and this article led me to the ASRock E3C246D4I. It's a not overly expensive Intel Coffee Lake board featuring an Aspeed AST2500 BMC. So the first thing I did was to compile OpenBMC. My computer was in for quite a chore there: it needed to download 11G of sources and compile them. Needless to say, this takes a long time on a notebook computer and is best done overnight. I flashed the image via the SPI header next to the BMC flash, using some mini crocodile clips at first.

To set up a nice way to play around with OpenBMC, I attempted to hook up an EM100Pro, a flash emulator, via the header. This did not seem to work, and I'm not sure what was going on. It looked like the real flash chip was not reliably put on HOLD by the EM100: when tracing the SPI commands with the EM100, 0xff was the response to all the (fast) read commands. I guess a fast SPI programmer will have to do for now. OpenBMC makes updates quite easy though: just copy the image-bmc to /run/initramfs/ and reboot, which launches an update that takes a minute or so (faster than my external programmer).

So what can OpenBMC do on that board? Not much, it seemed at first. Powering the board on and off did not work, and not much else did either. The author of the port, Zev Weiss, helped me a lot to get things working though. With a bit of manual GPIO magic, powering on and off works well. So the upstream code needs a bit of polish, but the branch from the original author of this board port fared much better: sensors and power control work fine. Fan control is not implemented yet, but I might look into that. Max fan speeds might be OK in a datacenter, but certainly not in my home office!

Coreboot

The host flash is muxed to the BMC SPI pins so the BMC can easily (re)flash the host firmware (and is even faster at this than the host PCH due to the high SPI frequency the BMC can use). To get that working, a few things needed to be done on the BMC. The flash is hooked up to the BMC SPI1 master bus, which needs to be declared in the FDT. U-Boot needs to set the SPI1 controller to master mode. The mux is controlled via a GPIO. Two other GPIOs (the ME_RECOVERY pins) also need to be configured such that the ME on the PCH does not attempt to mess with the firmware while we're flashing.

A flash controlled by a BMC is a very comfortable situation for a coreboot developer who needs to do a dozen reflashes an hour, so hacking on coreboot with this device was bliss (as soon as I got the UART console working). I don't have the schematics for this board, so I had to make do with what the vendor AMI firmware sets up and decode it from the hardware registers. This worked well: there is a tool in util/intelp2m that generates the PCH GPIO configuration and outputs valid C code that can be integrated directly into coreboot.

I built a minimal port based on other Intel Coffee Lake boards, and after fixing a few issues like the console not working and memory init failing, it seemed to initialise all the PCI devices more or less correctly and got to the payload! The default payload on x86 with coreboot is SeaBIOS. It looks like this payload does not like this board very much though: it hangs in the menu, and I never got it to boot anything. Tianocore (EDK2) proved a much better match and was able to boot from my HDD attached via USB without any issues. Booting the virtual CD-ROM from the BMC also worked like a charm.

You can find the code on gerrit. Most things like USB, the 10G NICs, BMC IP-KVM and BMC Serial over LAN are working with that code.

What's next: get a LinuxBoot payload working and write some public documentation on how to set up OpenBMC and coreboot on this nice board. Maybe I can also get u-bmc working on this board? A few seconds vs a few hours of compile time does seem like a compelling argument.

Open source cache as ram with Intel Bootguard

FSP-T in open source projects

x86 CPUs boot up in a very bare state. They execute the first instruction at the top of memory mapped flash in 16 bit real mode. DRAM is not available (AMD Zen CPUs are the exception) and the CPU typically has no memory addressable SRAM, a feature which is common on ARM SoCs. This makes running C code quite hard because you are required to have a stack. This was solved on x86 using a technique called cache as ram or CAR. Intel calls this non eviction mode or NEM. You can read more about this here. Coreboot has support for setting up and tearing down CAR with two different codepaths:

- Using an open source implementation.

- Using a closed source implementation, using FSP-T (TempRamInit) and FSP-M (TempRamExit).
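
To make the "top of memory mapped flash" statement a bit more concrete, here is a small sketch of the address arithmetic. It assumes the flash is mapped so that its last byte sits just below 4 GiB, with the reset vector at 0xFFFFFFF0; the 16 MiB flash size and the `flash_offset` helper are made up for illustration.

```python
FOUR_GIB = 1 << 32
RESET_VECTOR = 0xFFFFFFF0  # first instruction fetched by the CPU


def flash_offset(phys_addr: int, flash_size: int) -> int:
    """Translate a physical address in the flash window to an offset in the image.

    Assumption: the flash is mapped so that it ends exactly at 4 GiB.
    """
    base = FOUR_GIB - flash_size
    if not base <= phys_addr < FOUR_GIB:
        raise ValueError("address is outside the memory mapped flash window")
    return phys_addr - base


flash_size = 16 * 1024 * 1024  # hypothetical 16 MiB flash chip
print(hex(flash_offset(RESET_VECTOR, flash_size)))  # 0xfffff0
```

In other words, under these assumptions the code that runs first lives in the last 16 bytes of the flash image, which is why the bootblock sits at the very end of a coreboot image.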

Open source cache as ram with Intel Bootguard

One of the reasons why there is still code to integrate FSP-T inside coreboot is Intel Bootguard support. Here you can read more on our work with that technology. Open source CAR did not work when the Bootguard ACM was run before the reset vector. With Bootguard, the first instruction that is run on the main CPU is no longer the reset vector at 0xfffffff0. The Intel Management Engine (ME) validates the Authenticated Code Module (ACM) with keys owned by Intel. The ACM code then verifies parts of the main boot firmware, in this case the coreboot bootblock, with a key owned by the OEM which is fused inside the ME hardware. To do this verification the ACM sets up an execution environment using exactly the same method as the main firmware: NEM.

The reason that open source cache as ram does not work is that the ACM has already set up NEM. So what needs to be done is to skip the NEM setup: you just want to set up a caching environment for the coreboot CAR, fill those cachelines and leave the rest of the setup as is. Bootguard capable CPUs have a read-only MSR with a bit that indicates whether NEM setup has already been done by an ACM. When that is the case, a different codepath needs to be taken that avoids setting up NEM again. See CB:36682 and CB:54010. It looks like filling cachelines for CAR is also a bit more tricky and needs more explicit care (CB:55791).
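
The decision described above can be sketched in a few lines. This is only a model of the control flow, not the actual coreboot assembly: the bit mask `NEM_DONE_BY_ACM` is a placeholder (the real MSR index and bit position are platform specific), and the step names and their ordering are a simplification.

```python
from typing import List

# Placeholder mask for "NEM was already set up by an ACM".
# Assumption for illustration only; not taken from any datasheet.
NEM_DONE_BY_ACM = 1 << 0


def car_setup_steps(bootguard_msr_value: int) -> List[str]:
    """Return a simplified list of CAR setup steps for a given MSR value."""
    steps = ["configure caching for the CAR region"]
    if not bootguard_msr_value & NEM_DONE_BY_ACM:
        # No ACM ran before us, so NEM still has to be set up here.
        steps.append("set up NEM")
    # In both cases the CAR cachelines have to be filled by the bootblock.
    steps.append("fill the CAR cachelines")
    return steps


print(car_setup_steps(0))
print(car_setup_steps(NEM_DONE_BY_ACM))
```

The only difference between the two paths is that the NEM setup step is left out when the ACM has already done it.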

So with very little code we were able to get Bootguard working with open source CAR!

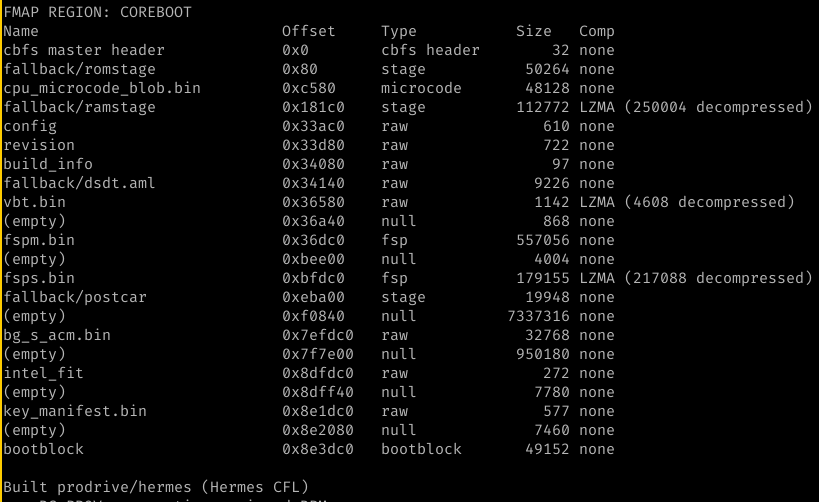

Here you see no fspt.bin in cbfs:

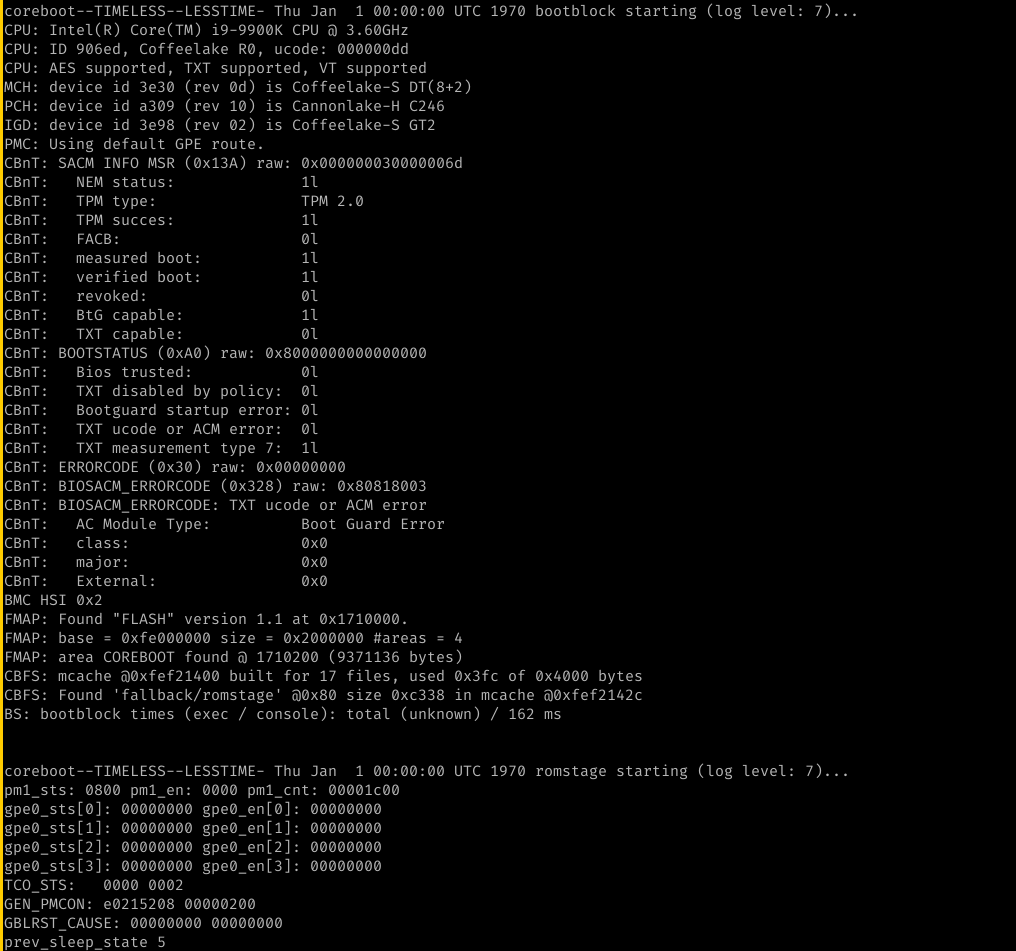

and here you see that bootblock is run with a working console and that romstage is loaded. This means that cache as ram works as intended.

What's next?

Given that all Intel Client silicon now works with open source cache as ram, including Bootguard support, there is no reason to keep FSP-T as a supported option for these platforms. There are however still Intel platforms in the coreboot tree that require FSP-T. Skylake-SP, Cooperlake-SP and Denverton-NS depend on other early hardware init that is done in FSP-T, for which there is no open source equivalent in coreboot. This makes FSP-T mandatory on those platforms for the time being.

The advantages of being in control of the execution environment are overwhelming. From personal experience working with the Cooperlake-SP platform, we regularly hit issues with FSP-T. Sometimes those were bugs inside the FSP-T code that had to be worked around. On other occasions it was coreboot making assumptions about the bootflow that were not compatible with FSP being in control of the execution environment. I can firmly say that FSP-T causes more trouble than it actually solves, so having that code open sourced is the best strategy. We hope that by setting this good example with open source Bootguard support, others will be incentivised to not rely on FSP-T but pursue open source solutions.

Wrangling the EC: Adventures in Power Sequencing

The Problem

We were preparing to ship out the first batch of Librem 14s right as we were putting the finishing touches on the firmware (both for the embedded controller, and the main coreboot/Pureboot firmware), and everything was looking good to finally get devices flashed and into our users’ hands. But as we flashed the laptops’ firmware to prep for shipping, a small but not insignificant number of devices were failing to boot. At first we thought the issue was with our flashing process, which was new for the Librem 14, as both the EC and main firmware are flashed in sequence on a live system. But re-flashing these problematic devices externally (using a USB flash programmer and a chip clip) did not resolve the issue, so the issue wasn’t with the flashing process itself.

Initial Troubleshooting

Since our flashing process didn’t appear to be the issue, the next step was to determine if the issue lay within the EC firmware or coreboot. As it is easier to get live debug output from the EC than coreboot, we started there. We compiled and flashed a debug build of the EC firmware, and attached the debugger. The debug console showed that the EC was booting and properly transitioning the main CPU from off (the S5 power state) to normal boot (the S0 power state). Since the laptop was in S0, it meant that coreboot should be running, so it was time to see what was going on there.

Getting debug output from coreboot is slightly more difficult than the EC, as the most common method, serial UART output, isn’t exposed anywhere on the Librem 14’s mainboard. Luckily we have another option: the SPI flash console (developed in-house and upstreamed into coreboot), which writes the coreboot console log to a dedicated region of the firmware flash chip itself. We compiled and flashed a debug build of coreboot with the flash console debugger, attempted a boot, and then read the flash chip back to see where coreboot was dying.

Diving Deeper

Reading back the flash console log showed that RAM init (performed by Intel’s FSP-M blob) was failing, but the error code was so generic it provided no clue as to the root cause of the failure. At this point, we’d need to get serial/UART output somehow, in order to get more info from FSP as to the reason for the failure. Reviewing the schematics showed a promising location to get the UART output (TX) line from the CPU, and next-day delivery from a friendly internet retailer for some needed hardware meant we were in business, or so we thought. Despite verifying all of the hardware separately (and verifying coreboot was correctly configured for UART output), no debug output was received. We ordered more hardware, and spent more time verifying all the links in the chain independently, to no avail.

A Little Primer on Power

To give a bit of background, the power sequence to boot a modern CPU (transition from S5 to S0) is a very, very complicated beast. It requires a specific sequence and precise timing between the EC, PCH, and voltage regulators for the enablement of the power rails and “power good” signals. There’s also an additional low power state (DS5 / deep S5) in which the EC sits when it first gets power (either from the internal battery, or an external power source), before anything else happens. So we need to precisely manage the order and timing of turning on power sources (rails) and signals (“power good”) from DS5 to S5 and then to S0.

A Fresh Approach

When developing the Librem EC firmware, we didn’t start from a completely blank slate. We based our firmware on System76’s open EC firmware (we forked it, in free software development terms), and we had the source code to the proprietary EC firmware. Both of these had their own baggage though: the original code was used for a different EC chip, and our board design/layout was very different; the proprietary EC code was a spaghetti mess with headers indicating development in the 2006-2009 time frame, and no comments or documentation. Despite these hurdles, we managed to reverse engineer the power sequencing from the proprietary EC code, and then apply it to the Librem EC code, along with all the other customization needed for the Librem 14. And it worked perfectly on all our development machines.

But now with large-scale flashing of production devices, we were experiencing boot failures. We knew that 1) the problem was with RAM initialization, and 2) the problem was in the EC firmware. The EC doesn’t directly control the power to the RAM, but it does tell the main CPU (or more precisely, the platform control hub / PCH) that power to the RAM is on and stable via the aforementioned “power good” signals. These signals tell the PCH that it’s safe to turn on other hardware components, and eventually to bring the CPU out of reset and start the boot process (this is the transition from the S5 power state to S0).

Zeroing In

Figuring that these “power good” signals were a good place to start looking, we again compared the proprietary EC code to our Librem EC firmware. This reexamination revealed that although we’d matched the sequence and timing of the power rails/signals, the way certain functions were called in the Librem EC code meant that there was some variability in the timing of the enablement of two of the “power good” signals. Under certain conditions, it was possible for them to be turned on too early, before the voltage regulator had stabilized the power to the RAM. Having identified the potential root cause, we adjusted the Librem EC code to ensure those signals didn’t turn on until the voltage regulator indicated the RAM was ready, crossed our fingers, and began testing. To our collective relief, this small adjustment to the power sequencing did indeed fix the issue. Our factory images were updated, and all previously “bricked” Librem 14s were updated with the new Librem EC firmware.

Hardware is Funny Like That

But the issue only affected a small percentage of Librem 14s. Why were some affected and not others? That’s a damn good question, and one we wish we had a good answer for. There’s always some variation in hardware components, and even when all components are within spec, a few microseconds’ difference across a number of components can add up to enough to make the difference between the laptop booting or not. The good news is that now that the issue has been identified and resolved, all future Librem 14s will ship with the updated EC firmware, and existing ones will receive it as an update at some point in the future (less critical here, since these devices are functioning normally).

The post Wrangling the EC: Adventures in Power Sequencing appeared first on Purism.

Hardware assisted root of trust mechanism and coreboot internals

Intel Converged Bootguard and TXT: a root of trust

Intel CBnT merges the functionality provided by TXT and BtG in one Authenticated Code Module (ACM). This is a code module signed by Intel that runs on the main CPU before the traditional x86 reset vector is run at address 0xFFFFFFF0. The job of this ACM is to measure and/or verify the main firmware, depending on the 'profile' that has been set up in the Intel Management Engine (ME). The profile determines what happens in case of a measurement or verification error. Strong policies entirely halt the system in case of failure, while others just report errors but still continue booting. The latter is often desirable for servers in a hyperscaler setup, such that the system admin can still run diagnostics on the system.

A few different components are needed in a working CBnT setup. The ACM was already mentioned. The hardware finds the ACM via a pointer in the Intel Firmware Interface Table (FIT), which itself is found via a pointer at the fixed location 0xFFFFFFC0. Other necessary components are the Key Manifest (KM) and Boot Policy Manifest (BPM), which are also found in the FIT.

The chain of trust is started in the following way: the Intel ME has a fuse which holds the hash of the public key of the KM. For testing CBnT this can be set up with 'fake-fusing', where the hash can still be changed afterwards; on production systems the hash will be permanently fused. The ACM compares that fused hash to the hash of the public key inside the KM, which is signed with the KM private key. The KM itself holds a hash of the BPM public key, which is compared to the public key stored in the BPM. The BPM is signed with the BPM private key. The role of the BPM is to define what segments of the firmware are Initial Bootblock (IBB). The BPM contains a digest of the IBBs and as such establishes a root of trust if the digest in the BPM matches what is on the flash. More in-depth info on this in intel boot guard. This is what Bootguard also did.
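
The hash chain from the fuse to the IBB can be modelled in a few lines. This is a toy sketch, not the real manifest format: the `KeyManifest` and `BootPolicyManifest` classes and their field names are invented for illustration, SHA-256 is assumed as the hash, and the signature checks over the KM and BPM are deliberately left out.

```python
import hashlib
from dataclasses import dataclass


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


@dataclass
class KeyManifest:
    public_key: bytes    # KM public key (signature over the KM is not modelled)
    bpm_key_hash: bytes  # hash of the BPM public key


@dataclass
class BootPolicyManifest:
    public_key: bytes    # BPM public key (signature over the BPM is not modelled)
    ibb_digest: bytes    # digest of the IBB segments


def chain_is_valid(fused_hash: bytes, km: KeyManifest,
                   bpm: BootPolicyManifest, ibb: bytes) -> bool:
    """Walk the chain: fuse -> KM key -> BPM key -> IBB digest."""
    if sha256(km.public_key) != fused_hash:
        return False
    if sha256(bpm.public_key) != km.bpm_key_hash:
        return False
    return sha256(ibb) == bpm.ibb_digest


# Toy data standing in for real keys and firmware.
km_key, bpm_key, ibb = b"km-public-key", b"bpm-public-key", b"bootblock"
km = KeyManifest(km_key, sha256(bpm_key))
bpm = BootPolicyManifest(bpm_key, sha256(ibb))
fused = sha256(km_key)

print(chain_is_valid(fused, km, bpm, ibb))                # True
print(chain_is_valid(fused, km, bpm, b"tampered block"))  # False
```

Each link only trusts the next one because its hash is pinned by the previous link, which is why a single fused hash is enough to anchor the whole chain.
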

What CBnT offers on top is the TXT functionality. The IBBs are measured into PCR0 of the TPM. Other TXT functionality, like clearing memory or locking down the platform before setting up DRTM with the SINIT ACM, is also provided by the CBnT ACM. See the TCG DRTM for more info on this. Merging the TXT functionality makes CBnT ACMs much bigger than BtG ACMs (256K vs 32K, depending on the platform).

The KM and BPM separation has in mind that there is one hardware owner, but multiple OEMs. The hardware always gets fused with the key of the owner. Each OEM might want to roll their own firmware and has its own BPM key. The owner then creates a KM with the OEM's BPM key hash inside it. The OEM can then generate their own BPM that matches the firmware they intend to use. KM and BPM also provide security version numbers that can be enforced. So the same hardware can have different OEMs during its lifetime: the previous OEM won't be able to generate a working CBnT image for the new OEM.

The strength of a CBnT setup and the trustworthiness it provides lie in the following things:

- the signature verification of the ACM done by the ME/Microcode (Intel)

- ACM signing key remaining private (Intel)

- the verification done by the ACM, which is a closed binary (it's 256K so not so small)

- KM keys remaining private (Manufacturer)

- OEM keys remaining private (OEM), KM security version number can be updated to make OEM keys obsolete though

- The IBB needs to continue the chain of trust, which is what the next section will be about

Chain of trust

Coreboot essentially supports two different methods for setting up a chain of trust: measured boot and verified boot.

Verified boot is conceptually easier to grasp. Each software component that is run after the bootblock is signed with a private key. The trusted bootblock has the corresponding public key and uses that to verify the integrity of the next program. If the signature does not match the binary, the firmware can report an error to let the user know that their system cannot be trusted, or the boot process can even be fully halted if that is desired.

Securing your firmware using measured boot works a bit differently and involves a TPM. The idea with measured boot is that before a component is used, it is digested and the hash is stored in the extend-only PCR registers of the TPM. When the boot process is done, the user reads out the TPM, and if the PCRs don't match what is expected, the firmware has been tampered with. A common use case is where the TPM can be read out remotely, independent from the host. If the TPM PCR values don't match, that system won't be allowed access to the main network. The admin can then take a look at that system, fix potential issues and allow that system back online after those have been resolved.

Historically, Google first added verified boot to coreboot with their VBOOT implementation. Measured boot was later added as an optional feature to VBOOT. Now measured boot can be used independently from Google's VBOOT.

Google's VBOOT was built with ChromeOS devices in mind. Those devices use widely varying hardware: different SoCs from Intel, AMD and many ARM SoC vendors. To support all those different SoCs, Google uses a common flash based root of trust. The root of trust lies in a read only region of the flash, a feature of some SPI flash chips that is kept in place by holding the /WP pin low. The verification mechanism resides in the read only region and verifies the firmware in the FW_MAIN_A/B FMAP partitions.
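
As a side note on the "extend-only" PCRs mentioned above, a minimal sketch of the extend operation looks like this. It assumes a SHA-256 PCR bank that starts out as all zeroes; the component names are placeholders and no real TPM is involved.

```python
import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 PCR


def extend(pcr: bytes, component: bytes) -> bytes:
    """Extend a PCR: new value = H(old value || H(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot(components) -> bytes:
    """Measure a list of boot components into one PCR, in boot order."""
    pcr = bytes(PCR_SIZE)  # assumed initial value: all zeroes
    for component in components:
        pcr = extend(pcr, component)
    return pcr


good = measure_boot([b"bootblock", b"romstage", b"ramstage"])
bad = measure_boot([b"bootblock", b"evil romstage", b"ramstage"])
print(good.hex())
print(good == bad)  # False
```

Because the old value is always hashed into the new one, a PCR cannot be set to an arbitrary value: the final value depends on every component and on the order in which they were measured.
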

The RO region of the flash also holds a full firmware for recovery. This copy of the firmware is also considered trusted. Such a VBOOT setup does not work that well with a root of trust method like CBnT/Bootguard. With Bootguard some initial parts of the firmware are marked as IBB and the ACM will verify those. It's up to the firmware and assets in those IBBs to continue the chain of trust and verify the next components that will be loaded. A fully trusted recovery image in the 'RO' region would need to be marked as IBB. CBnT/Bootguard were however not designed for that, as the IBBs would then be too big. Only code that is required to set up a chain of trust ought to be marked as IBB, not a full firmware in the 'RO' region.

In more practical terms, if the bootblock is marked as IBB with Bootguard, the romstage that comes after it cannot be a romstage in the 'RO' FMAP region, as there is no verification on it. VBOOT needs to be modified to only load things from FW_MAIN_A/B. Just not populating the RO FMAP with a romstage is not sufficient: an attacker could take a working Bootguard image and manually add a romstage to the RO cbfs. The solution is to disable the option for a full recovery bootpath in VBOOT. A note about the future: some work is being done to have per cbfs file verification. This would fit the Bootguard use case much better, as it removes the need to be careful about what cannot be in the VBOOT RO region.

Another difficulty lies in what to mark as IBB. An obvious one is the bootblock, as that code gets executed 'first' (well, after the ACM has run). But the bootblock accesses other assets, typically quite early in the bootflow. The CPU starts in a bare state: there is no RAM! A solution is to use CPU cache as RAM. This setup is rather tricky and the details are not always public. So sometimes you are obliged to use Intel's FSP-T to set up an environment in which you can execute C code.

Calling FSP-T therefore happens in assembly, and for this reason verification of the FSP-T binary cannot happen this early. Even finding FSP-T causes problems! FSP-T is a cbfs file, and to find cbfs files you have to walk from bottom to top until you find the proper file. This is prone to attacks: someone can modify the image/cbfs such that other, non trusted code gets run instead. The solution is to place FSP-T at an address known at buildtime, which the bootblock code jumps to. FSP-T also needs to be trusted, so it has to be marked as IBB and verified by the ACM.

OK, now we are in a C environment, but we’re not there yet. We need to set things up such that we can verify the next parts of the boot process. For that we need the public key, which lies in the "GBB" fmap partition. FMAP partitions are found via the "FMAP" fmap partition, whose location is known at buildtime. So again both "FMAP" and "GBB" need to be marked as IBB, to be verified by the ACM.

With VBOOT there is the option to do the verification in a separate stage, verstage. Same problem here too: it's a cbfs file which can only be found at runtime. Here the solution is to link most of the verstage code inside the bootblock. As it turns out, this is even a good idea for most x86 platforms using a Google VBOOT setup. You have one stage less, so less code duplication. It saves some space and is likely a tiny bit faster, as less flash needs to be accessed, which is a slow operation.

Other things are done in the bootblock, like setting up a console. The verbosity of the console is sometimes fetched with board specific methods relying on other parts of the flash. So again this needs to be fetched from a location known at buildtime and marked as an IBB, or simply avoided or done later in the boot process.

So the conclusion is that all assets that are used before the chain of trust setup code is run (VBOOT setup or measured boot TPM setup) need to be referenced statically: searching for them at runtime is not an option, and they need to be marked as IBB with Bootguard.

Converged security suite

CSS is an open source project maintained by 9elements. It is written in Go, which makes it quite portable. It's a set of tools and libraries related to firmware and firmware security. One such tool is cbnt-prov. It is integrated in the coreboot buildsystem and can properly set things up for Intel CBnT by generating a KM and BPM. It parses a coreboot image and automatically detects what segments need to be marked as IBB. It is however not just a coreboot specific tool to glue things together for CBnT. It supports dumping information on the CBnT setup for generic UEFI images too. It can take an existing setup and turn it into a configuration file, which can be reused later on, for instance if you want to deploy the same firmware but with different keys. One last important feature is validation of an existing image.

We are working hard on an equivalent tool for Bootguard that will be called bg-prov. We hope to get this ready for production soon. This is a big step forward in the usability of coreboot, as previously you were bound to proprietary tools provided by Intel that were only accessible under NDA and have usability issues as they are Microsoft Windows executables. Coreboot is the best open source x86 firmware to date, and having fully free and open source software to cover the common use case of Intel Bootguard and CBnT makes coreboot a more attractive firmware solution. We hope that this improves its market adoption!

The Future of Open-Source Firmware on Server Systems

Open-Source Firmware for Host Processors is already quite prominent in the embedded world - a lot of systems run on u-boot, and nearly every Chromebook that is not older than 5 years runs on coreboot (Surprise, Surprise!). In addition, Intel officially supports coreboot and their Firmware Support Package (FSP) in API mode, which is mandatory to be supported in coreboot. So the future is looking bright for the embedded world - but what does the server market look like?

Disclaimer: All information has been gathered from public documents or talks at conferences - none of this information has been officially confirmed by any of the SoC vendors.

The answer is: It depends. It depends on whom you're talking to and which SoC vendor you are talking about.

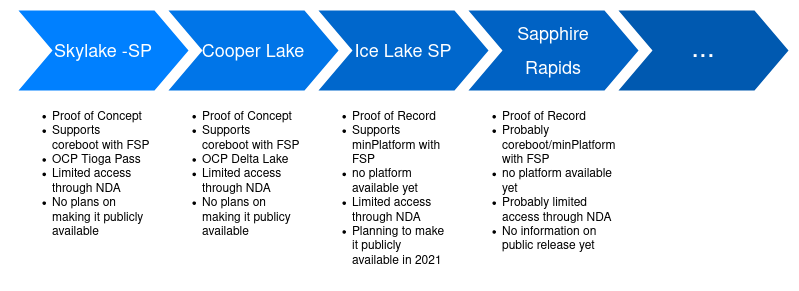

Intel

Intel announced at the OCP Tech Week 2020 (again) that they will support FSP & coreboot on IceLake platforms and beyond - that means that the upcoming Sapphire Rapids SP platform will also be supported with coreboot and FSP. Intel has gone through quite a transformation over the last few generations of Xeon-SP platforms. There is a proof of concept with coreboot and FSP for Skylake-SP on the two-socket server platform OCP Tioga Pass. It landed upstream in the coreboot repositories and is still maintained and functional. However, the FSP needed to build the platform is still only accessible under NDA and will not be maintained by Intel anymore.

ARM

Ampere Computing is a regular guest on the OCP OSF call and presented their LinuxBoot solution at the latest OCP Tech Week 2020. Ampere's Arjun Khare also presented the latest open-source firmware efforts at FOSDEM 2021 - by nature, ARM has always been more open when it comes to firmware than x86 SoC vendors. Ampere is definitely one of the companies to watch in the open-source firmware space. In general, ARM is picking up more and more speed in the server world and might be moving into the broader spectrum.

AMD

Even though AMD is heavily involved in open-source firmware on their consumer platforms, nothing is publicly known yet about their efforts to support open-source firmware on server platforms. Our friends from 3mdeb gave a presentation at FOSDEM 2021 about the current state of OSF on AMD platforms. Bottom line: nothing is publicly known; however, AMD is hiring coreboot developers (mainly for their mobile line), and rumors go around that they're working on something. One main push could have been Ron's presentation at the Open-Source Firmware Conference 2020 on booting an AMD Rome server board with open-source firmware - this caught quite some attention. Still, AMD has not made any information public, so we need to wait and see if there is more to come.

TL;DR

Intel is currently one of the top-pushing companies in the open-source firmware space. The OCP's Open System Firmware initiative is also redefining the boundaries for server systems. Overall we see quite some movement in the open-source firmware world - however, most of the information is still not publicly confirmed and can only be shared under NDA. We hope this changes in the future. 9elements has a good working relationship with various SoC vendors - we specialize in building open-source firmware for scalable server systems and are able to support the newest generations. We work on a regular basis with OCP and other scalable server systems. If you would like to talk about OSF on a server system, get in touch with us!

Announcing coreboot 4.14

coreboot 4.14 was released today, on May 10th, 2021.

Since 4.13 there have been 3660 new commits by 215 developers. Of these, about 50 contributed to coreboot for the first time. Welcome to the project!

These changes have been all over the place, so that there's no particular area to focus on when describing this release: We had improvements to mainboards, to chipsets (including much welcomed work to open source implementations of what has been blobs before), to the overall architecture.

Thank you to all developers who made coreboot the great open source firmware project that it is, and made our code better than ever.

New mainboards
--------------

* AMD Bilby

* AMD Majolica

* GIGABYTE GA-D510UD

* Google Blipper

* Google Brya

* Google Cherry

* Google Collis

* Google Copano

* Google Cozmo

* Google Cret

* Google Drobit

* Google Galtic

* Google Gumboz

* Google Guybrush

* Google Herobrine

* Google Homestar

* Google Katsu

* Google Kracko

* Google Lalala

* Google Makomo

* Google Mancomb

* Google Marzipan

* Google Pirika

* Google Sasuke

* Google Sasukette

* Google Spherion

* Google Storo

* Google Volet

* HP 280 G2

* Intel Alderlake-M RVP

* Intel Alderlake-M RVP with Chrome EC

* Intel Elkhartlake LPDDR4x CRB

* Intel shadowmountain

* Kontron COMe-mAL10

* MSI H81M-P33 (MS-7817 v1.2)

* Pine64 ROCKPro64

* Purism Librem 14

* System76 darp5

* System76 galp3-c

* System76 gaze15

* System76 oryp5

* System76 oryp6

Removed mainboards
------------------

* Google Boldar

* Intel Cannonlake U LPDDR4 RVP

* Intel Cannonlake Y LPDDR4 RVP

Deprecations and incompatible changes
-------------------------------------

### SAR support in VPD for Chrome OS

SAR support in VPD has been deprecated for Chrome OS platforms for > 1 year now. All new Chrome OS platforms have switched to using SAR tables from CBFS. For the next release, coreboot is updated to align with the Chrome OS factory changes and hence SAR support in VPD is deprecated in [CB:51483](https://review.coreboot.org/51483). Starting with this release, anyone building coreboot for an already released Chrome OS platform with SAR table in VPD will have to extract the "wifi_sar" key from VPD and add it as a file to CBFS using the following steps:

* On DUT, read SAR value using `vpd -i RO_VPD -g wifi_sar`

* In coreboot repo, generate CBFS SAR file using:

`echo ${SAR_STRING} > site-local/${BOARD}-sar.hex`

* Add to site-local/Kconfig:

```
config WIFI_SAR_CBFS_FILEPATH
	string
	default "site-local/${BOARD}-sar.hex"
```
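The last two steps above can be scripted. What follows is a minimal Python sketch, assuming the SAR string has already been read on the DUT with the `vpd` command. The helper name `write_sar_files` and the decision to append to `site-local/Kconfig` are illustrative assumptions, not part of coreboot's tooling.

```python
from pathlib import Path


def write_sar_files(repo_root, board, sar_string):
    """Write the SAR hex file and a matching Kconfig entry under site-local/.

    `sar_string` is the value printed by `vpd -i RO_VPD -g wifi_sar` on the DUT.
    Returns the repo-relative path of the generated hex file.
    """
    site_local = Path(repo_root) / "site-local"
    site_local.mkdir(parents=True, exist_ok=True)

    rel_path = f"site-local/{board}-sar.hex"
    # Same content as `echo ${SAR_STRING} > site-local/${BOARD}-sar.hex`
    # (echo appends a trailing newline).
    (Path(repo_root) / rel_path).write_text(sar_string + "\n")

    kconfig_entry = (
        "config WIFI_SAR_CBFS_FILEPATH\n"
        "\tstring\n"
        f'\tdefault "{rel_path}"\n'
    )
    # Append, so that settings already present in site-local/Kconfig are kept.
    with open(site_local / "Kconfig", "a") as kconfig:
        kconfig.write(kconfig_entry)
    return rel_path
```

Calling `write_sar_files(".", "myboard", sar_string)` from the top of the coreboot tree would create `site-local/myboard-sar.hex` and point `WIFI_SAR_CBFS_FILEPATH` at it; `myboard` is a placeholder for the real board name.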

### CBFS stage file format change

[CB:46484](https://review.coreboot.org/46484) changed the in-flash file format of coreboot stages to prepare for per-file signature verification. As described in more detail in the commit message, when manipulating stages in a CBFS, the cbfstool build must match the coreboot image so that they're using the same format: coreboot.rom and cbfstool must be built from coreboot sources that either both contain this change or both do not contain this change.

Since stages are usually only handled by the coreboot build system, which builds its own cbfstool (and therefore it always matches coreboot.rom), this shouldn't be a concern in the vast majority of scenarios.

Significant changes
-------------------

### AMD SoC cleanup and initial Cezanne APU support

There's initial support for the AMD Cezanne APUs in the tree. This code hasn't started as a copy of the previous generation, but was based on a slightly modified version of the example/min86 SoC. During the cleanup of the existing Picasso SoC code the common parts of the code were moved to the common AMD SoC code, so that they could be used by the Cezanne code instead of adding another slightly different copy.

### X86 bootblock layout

The static size C_ENV_BOOTBLOCK_SIZE was mostly dropped in favor of dynamically allocating the stage size; the Kconfig is still available to use as a fixed size and to enforce a maximum for selected chipsets. Linker sections are now top-aligned for a reduced flash footprint and to maintain the requirements of near jump from reset vector.
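To illustrate what top alignment means for addresses, here is a small Python sketch. It only assumes the usual x86 convention that the flash is mapped so that it ends at 4 GiB and that the reset vector sits at 0xFFFFFFF0. The function names are made up for this example and do not come from the coreboot linker scripts.

```python
FOUR_GIB = 1 << 32
RESET_VECTOR = 0xFFFFFFF0  # first instruction fetch address on x86 after reset


def top_aligned_base(stage_size):
    """Start address of a stage whose last byte sits just below 4 GiB."""
    if not 16 <= stage_size <= FOUR_GIB:
        raise ValueError("stage must at least cover the reset vector")
    return FOUR_GIB - stage_size


def reset_vector_offset(stage_size):
    """Offset of the reset vector from the start of the top-aligned stage."""
    return RESET_VECTOR - top_aligned_base(stage_size)
```

With a 32 KiB stage, `top_aligned_base(0x8000)` is `0xFFFF8000` and the reset vector lies at offset `0x7FF0` inside it. The smaller the stage, the closer its code stays to the reset vector.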

### ACPI GNVS framework

SMI handlers for APM_CNT_GNVS_UPDATE were dropped; GNVS pointer to SMM is now passed from within SMM_MODULE_LOADER. Allocation and initialisations for common ACPI GNVS table entries were largely moved to one centralized implementation.

### Intel Xeon Scalable Processor support is now considered mature

Intel Xeon Scalable Processor (Xeon-SP) family [1] is designed primarily to serve the needs of the server market.

coreboot support for Xeon-SP is in the src/soc/intel/xeon_sp directory. This release has support for SkyLake-SP (SKX-SP), which is the 2nd generation, and for CooperLake-SP (CPX-SP), which is the 3rd generation or the latest generation [2] on the market.

With this release, the codebase for multiple generations of Xeon-SP was unified and optimized:

* SKX-SP SoC code is used in the OCP TiogaPass mainboard [3]. Support for this board is in Proof Of Concept status.

* CPX-SP SoC code is used in the OCP DeltaLake mainboard. Support for this board is in DVT (Design Validation Test) exit equivalent status.

Supported features, (performance/stability) test scopes, known issues and feature gaps are described in [4].

[1] https://www.intel.com/content/www/us/en/products/details/processors/xeon/scalable.html

[2] https://www.intel.com/content/www/us/en/products/docs/processors/xeon/3rd-gen-xeon-scalable-processors-brief.html

[3] ../mainboard/ocp/tiogapass.md

[4] ../mainboard/ocp/deltalake.md

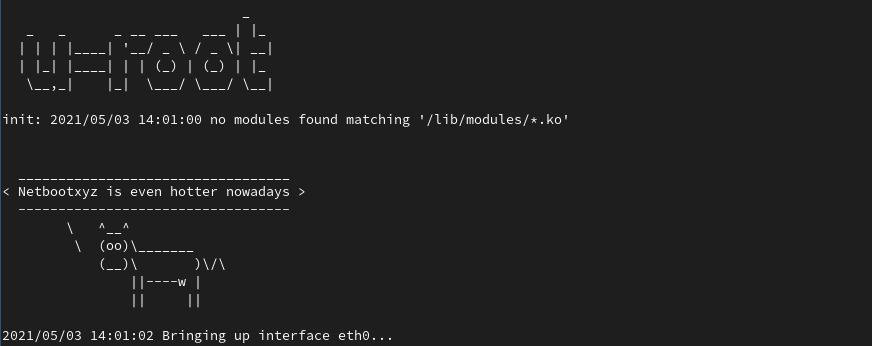

Netboot.xyz is now Part of LinuxBoot

We recently worked on some patches to adopt netboot.xyz and integrate it into LinuxBoot - and it got merged now.

Netboot.xyz

So - what is netboot.xyz? From their website:

> netboot.xyz is a way to PXE boot various operating system installers or utilities from one place within the BIOS without the need of having to go retrieve the media to run the tool.

In our last blog article we already pointed out some development work and what motivated us - basically, we need a reliable way to install operating systems on machines that either sit in a rack we cannot access or do not have any external USB ports. Our former way was to build a busybox image which downloads a disk image containing a minimal Linux operating system into RAM. Once downloaded, we would dd the image onto a hard drive - and off you go.
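The final step of that former workflow, copying the downloaded image onto the disk, boils down to a chunked copy. The following Python sketch shows the idea. It is not the tooling we used, and the function name `write_image` is an assumption made for illustration.

```python
def write_image(src, dst, chunk_size=4 * 1024 * 1024):
    """Copy a disk image from `src` to `dst` in fixed-size chunks, like dd.

    Both arguments are binary file objects. Returns the number of bytes written.
    """
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break  # end of the image reached
        dst.write(chunk)
        total += len(chunk)
    dst.flush()
    return total
```

In practice `src` would be the image held in RAM (or the download stream) and `dst` a block device opened in binary write mode. Which device that is differs per platform, and that is exactly the kind of manual adjustment the old approach required.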

However, that approach needed a lot of manual tooling and adjustment to the platform we were currently working on - and netboot.xyz already has a process in place - so adopting it in u-root seemed only logical. It's open-source, that's the idea, right?

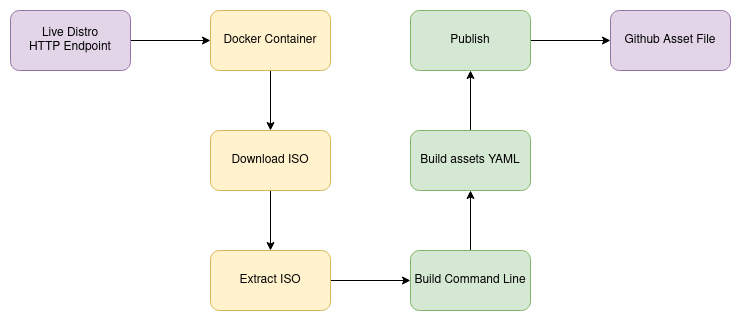

netboot.xyz Image Generation Process

netboot.xyz already has an image processing and generation process in place, which we use to download the images from u-root.

The endpoints.yaml file contains the kernel, initrd and squashfs locations in the following manner:

    ubuntu-19.10-live-kernel:
      path: /ubuntu-core-19.10/releases/download/19.10-055f9330/
      files:
      - initrd
      - vmlinuz
      os: ubuntu
      version: '19.10'
    [...]
    ubuntu-19.10-KDE-squash:
      path: /ubuntu-squash/releases/download/9854741e-b243fefb/
      files:
      - filesystem.squashfs
      os: ubuntu
      version: '19.10'
      flavor: KDE
      kernel: ubuntu-19.10-live-kernel
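To make the relationship between the entries concrete, here is a hedged Python sketch of how a boot menu entry could be resolved into download URLs. It assumes that the `kernel` key of a squashfs entry names the entry that provides kernel and initrd, as the excerpt above suggests. `BASE_URL` and the function `boot_files` are placeholders, and this is not the u-root implementation.

```python
# Hypothetical base URL; the real download host is not given in this article.
BASE_URL = "https://example.invalid"

# The two entries from the excerpt above, as the dict a YAML parser would return.
ENDPOINTS = {
    "ubuntu-19.10-live-kernel": {
        "path": "/ubuntu-core-19.10/releases/download/19.10-055f9330/",
        "files": ["initrd", "vmlinuz"],
        "os": "ubuntu",
        "version": "19.10",
    },
    "ubuntu-19.10-KDE-squash": {
        "path": "/ubuntu-squash/releases/download/9854741e-b243fefb/",
        "files": ["filesystem.squashfs"],
        "os": "ubuntu",
        "version": "19.10",
        "flavor": "KDE",
        "kernel": "ubuntu-19.10-live-kernel",
    },
}


def boot_files(endpoints, name, base_url=BASE_URL):
    """Return {file name: URL} for an entry plus the kernel entry it points to."""
    entry = endpoints[name]
    urls = {f: base_url + entry["path"] + f for f in entry["files"]}
    kernel = entry.get("kernel")
    if kernel is not None:
        # Follow the reference to the entry that ships vmlinuz and initrd.
        urls.update(boot_files(endpoints, kernel, base_url))
    return urls
```

`boot_files(ENDPOINTS, "ubuntu-19.10-KDE-squash")` yields three URLs: the squashfs from the squash entry, plus `initrd` and `vmlinuz` from the kernel entry it references.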

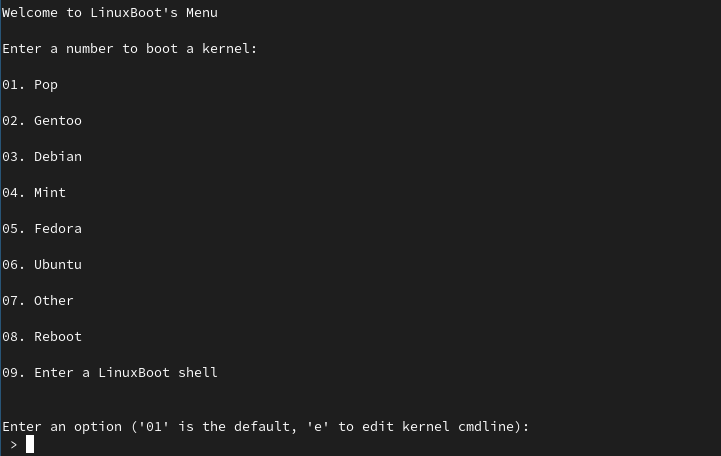

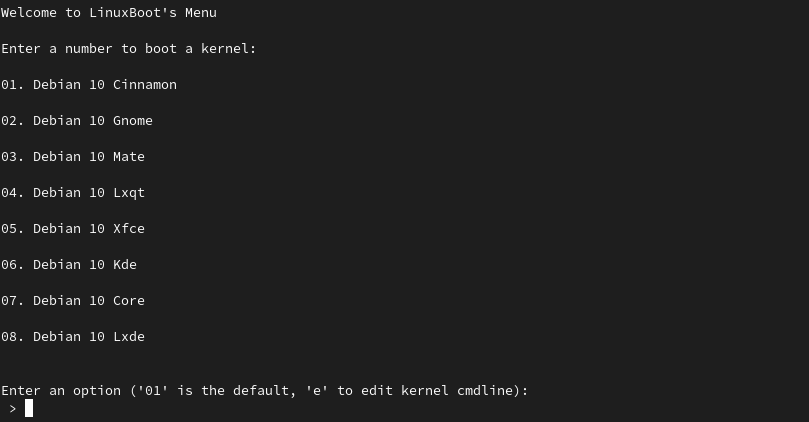

The endpoints.yaml file is used to build the u-root netboot.xyz menu:

07 will boot Debian 10 Core.

Be aware - only some major distributions have been tested and verified working. Everything in the Other menu should be considered experimental and might not work properly.

netboot.xyz provides a convenient way to boot into a live system on your machine. As we work a lot with server machines to which we do not have direct hardware access, merging netboot.xyz into u-root gives us an easy way to install an operating system on a remote machine during development. If you would like to know more about netboot.xyz, check out their homepage. The corresponding code in u-root can be found here. If you would like to talk with us about firmware - feel free to contact us!

A short journey to x86 long mode in coreboot on recent Intel platforms

$ qemu-system-x86_64 -M q35 -accel kvm -bios build/coreboot.rom

But running coreboot's x86_64 code on KVM gave more magic errors than you could find in books about some famous magician with a scar on his forehead. To summarize:

- On recent AMD platforms it stops after entering x86_64 long mode.

- On older Intel platforms everything works.

- On recent Intel platforms, after entering long mode every instruction causes a fault and is thus emulated by the kernel, which doesn't handle FPU instructions that well...

- On recent Intel platforms the MMU aborts walking page tables and returns the data within the page table itself when looking up some virtual addresses...

    coreboot-4.13-241-g52ab788549-dirty Tue Dec 1 18:23:08 UTC 2020 bootblock starting (log level: 7)...
    CPU: Intel(R) Xeon(R) CPU E3-1240 v6 @ 3.70GHz
    CPU: ID 906e9, Kabylake H B0, ucode: 000000d5
    CPU: AES supported, TXT supported, VT supported
    MCH: device id 5918 (rev 05) is Kabylake DT 2
    PCH: device id a149 (rev 31) is Skylake PCH-H C236
    IGD: device id ffff (rev ff) is Unknown
    FMAP: Found "FLASH" version 1.1 at 0xb10000.
    FMAP: base = 0xff000000 size = 0x1000000 #areas = 4
    FMAP: area COREBOOT found @ b10200 (5176832 bytes)
    CBFS: Found 'fallback/romstage' @0x80 size 0xe334
    BS: bootblock times (exec / console): total (unknown) / 53 ms

    coreboot-4.13-241-g52ab788549-dirty Tue Dec 1 18:23:08 UTC 2020 romstage starting (log level: 7)...
    pm1_sts: 0900 pm1_en: 4000 pm1_cnt: 00000000
    gpe0_sts[0]: 00000000 gpe0_en[0]: 00000000
    gpe0_sts[1]: 00000000 gpe0_en[1]: 00000000
    gpe0_sts[2]: 00000000 gpe0_en[2]: 00000000
    gpe0_sts[3]: 00000000 gpe0_en[3]: 00000000
    TCO_STS: 0000 0000
    GEN_PMCON: e0810200 000018c8
    GBLRST_CAUSE: 00000002 00000000
    prev_sleep_state 0
    FMAP: area COREBOOT found @ b10200 (5176832 bytes)
    CBFS: Found 'fspm.bin' @0x5fdc0 size 0x63000
    POST: 0x34
    FMAP: area RW_MRC_CACHE found @ b00000 (65536 bytes)
    MRC: no data in 'RW_MRC_CACHE'
    No memory dimm at address A2
    No memory dimm at address A4
    POST: 0x36
    POST: 0x92

It hung when entering FSP-M, which, being a binary blob, wasn't automatically recompiled to x86_64. A wrapper (CB:48175), written in assembly, fixed the problem by falling back to x86_32 when calling into FSP. The wrapper automatically switches back into x86_64 mode when the function returns. This is slow, but as we don't have proper blobs there's no other way around it.

Memory init console log:

    coreboot-4.13-242-g04129be978-dirty Tue Dec 1 18:42:20 UTC 2020 romstage starting (log level: 7)...
    pm1_sts: 0900 pm1_en: 0000 pm1_cnt: 00000000
    gpe0_sts[0]: 00000000 gpe0_en[0]: 00000000
    gpe0_sts[1]: 00000000 gpe0_en[1]: 00000000
    gpe0_sts[2]: 00000000 gpe0_en[2]: 00000000
    gpe0_sts[3]: 00000000 gpe0_en[3]: 00000000
    TCO_STS: 0000 0000
    GEN_PMCON: e0810200 000018c8
    GBLRST_CAUSE: 00000002 00000000
    prev_sleep_state 0
    FMAP: area COREBOOT found @ b10200 (5176832 bytes)
    CBFS: Found 'fspm.bin' @0x5fdc0 size 0x63000
    POST: 0x34
    FMAP: area RW_MRC_CACHE found @ b00000 (65536 bytes)
    MRC: no data in 'RW_MRC_CACHE'
    No memory dimm at address A2
    No memory dimm at address A4
    POST: 0x36
    POST: 0x92
    POST: 0x98
    FspMemoryInit returned 0x80000002
    POST: 0xe3
    FspMemoryInit returned an error!

The FSP was now able to run, but it returned the error "Invalid parameter". This was because FSP's config structures contain void pointers, which have a different size on x86_64 and therefore don't match what FSP expects. Fixing those headers is an ongoing task, but for now it was hacked around.

SMM stack trash console log:

    IOAPIC: Initializing IOAPIC at 0xfec00000
    IOAPIC: Bootstrap Processor Local APIC = 0x00
    IOAPIC: ID = 0x02
    PCI: 00:1f.0 init finished in 9 msecs
    POST: 0x75
    POST: 0x75
    PCI: 00:1f.2 init
    RTC Init
    Set power on after power failure.
    Disabling ACPI via APMC.

    coreboot-4.13-241-g52ab788549-dirty Tue Dec 1 18:23:08 UTC 2020 smm starting (log level: 7)...
    SMI_STS: PM1 APM
    SMI#: ACPI disabled.
    canary 0xcdcdcdcd7f9ff800 != 0x7f9ff800
    SMM Handler caused a stack overflow

Finally it booted into SMM, but crashed due to stack trashing. That turned out to be a false positive: the stack canary is the size of a void pointer and is written in x86_32 assembly, but checked in x86_64 C code, and thus the check failed. Writing 4 additional bytes in the assembly code fixed the stack canary check, and it finally booted.

The (CB:48215) patch:

     /* Write canary to the bottom of the stack */
     movl stack_size, %eax
     subl %eax, %ebx /* %ebx(stack_top) - size = %ebx(stack_bottom) */
     movl %ebx, (%ebx)
    +#if ENV_X86_64
    +movl $0, 4(%ebx)
    +#endif
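Both width mismatches from this section, the pointers in the FSP config structures and the stack canary, can be reproduced outside of firmware. The Python sketch below uses the `struct` module to model them. The 0xCD fill byte is inferred from the upper half of the canary value in the log, and the three-field config struct is a made-up stand-in, not an actual FSP structure.

```python
import struct

STACK_BOTTOM = 0x7F9FF800  # address taken from the SMM log above
FILL = 0xCD                # fill byte implied by the 0xcdcdcdcd upper half


def canary_seen_by_64bit_code(zero_upper_half):
    """Write the canary like the 32-bit assembly does, read it like 64-bit C."""
    slot = bytearray([FILL] * 8)                    # 8-byte slot at the stack bottom
    struct.pack_into("<I", slot, 0, STACK_BOTTOM)   # movl %ebx, (%ebx)
    if zero_upper_half:
        struct.pack_into("<I", slot, 4, 0)          # movl $0, 4(%ebx)
    return struct.unpack_from("<Q", slot, 0)[0]     # pointer-sized load in long mode


def config_layout_size(pointer_format):
    """Packed size of a made-up config struct: u32, pointer, u32."""
    return struct.calcsize("<I" + pointer_format + "I")
```

Without the extra write, `canary_seen_by_64bit_code(False)` returns `0xcdcdcdcd7f9ff800`, the value from the log; with it, the result is `0x7f9ff800` and the check passes. Likewise, `config_layout_size("I")` is 12 bytes while `config_layout_size("Q")` is 16, which shows how every field after a pointer shifts once the caller is compiled for x86_64.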

In summary, it took about a day to add x86_64 support, and half of the code needed to be written in assembly. With those patches in place it should be easier to port additional platforms to x86_64, reducing the time to a few hours. I invite everyone to play with the changes, hack the code and improve it to make this open source project even more awesome.