The laptop industry was still in its infancy back in 1990, but it already faced a core problem that we still face today - power and thermal management are hard, but also critical to a good user experience (and potentially to the lifespan of the hardware). This was in the days when DOS and Windows had no memory protection, so handling these problems at the OS level would have been an invitation for someone to overwrite your management code and potentially kill your laptop. The safe option was pushing all of this out to an external management controller of some sort, but vendors in the 90s were the same as vendors now and would do basically anything to avoid having to drop an extra chip on the board. Thankfully(?), Intel had a solution.

The 386SL was released in October 1990 as a low-power, mobile-optimised version of the 386. Critically, it included a feature that let vendors ensure that their power management code could run without OS interference. A small window of RAM was hidden behind the VGA memory[1] and the CPU was configured so that various events would cause it to stop executing the OS and jump to this protected region. It could then do whatever power or thermal management tasks were necessary and return control to the OS, which would be none the wiser. Intel called this System Management Mode, and we've never really recovered.

Step forward to the late 90s. USB is now a thing, but even the operating systems that support USB usually don't support it in their installers (and plenty of operating systems still don't have USB drivers at all). The industry needed a transition path, and System Management Mode was there for them. Vendors configured the chipset to generate a System Management Interrupt (or SMI) whenever the OS tried to access the PS/2 keyboard controller. The CPU would then trap into some SMM code that knew how to talk to USB, figure out what was going on with the USB keyboard, fake up the results and pass them back to the OS. As far as the OS was concerned, it was talking to a normal keyboard controller - but in reality, the "hardware" it was talking to was entirely implemented in software on the CPU.
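To make the shape of that trick concrete, here is a toy model in Python. It is only a sketch of the idea, not firmware: ports 0x60 and 0x64 and the "output buffer full" status bit are the standard PC keyboard controller interface, but `ToyUsbKeyboard`, `ToySmmHandler`, `ToyChipset` and `os_poll_keyboard` are all hypothetical names invented for illustration, and a real handler would be talking to a USB host controller rather than a Python queue.

```python
from collections import deque

# Standard PC keyboard controller ports.
KBD_DATA_PORT = 0x60
KBD_STATUS_PORT = 0x64
STATUS_OUTPUT_FULL = 0x01  # bit 0: a byte is waiting in the data port


class ToyUsbKeyboard:
    """Hypothetical stand-in for a USB keyboard."""

    def __init__(self):
        self.pending = deque()

    def press(self, scancode):
        self.pending.append(scancode)


class ToySmmHandler:
    """Hypothetical SMM code that fakes keyboard controller reads."""

    def __init__(self, usb_keyboard):
        self.usb = usb_keyboard
        self.smi_count = 0  # how many times we were entered

    def handle_read(self, port):
        self.smi_count += 1
        if port == KBD_STATUS_PORT:
            return STATUS_OUTPUT_FULL if self.usb.pending else 0x00
        if port == KBD_DATA_PORT:
            return self.usb.pending.popleft() if self.usb.pending else 0x00
        return 0xFF


class ToyChipset:
    """Routes port reads to the SMM handler if the port is trapped."""

    def __init__(self, smm, trapped_ports):
        self.smm = smm
        self.trapped = set(trapped_ports)

    def inb(self, port):
        if port in self.trapped:
            return self.smm.handle_read(port)  # this is the "SMI"
        return 0xFF  # assumption: nothing answers on untrapped ports


def os_poll_keyboard(chipset):
    """What a simple OS driver does: check status, then read data."""
    if chipset.inb(KBD_STATUS_PORT) & STATUS_OUTPUT_FULL:
        return chipset.inb(KBD_DATA_PORT)
    return None
```

The point of the model is that `os_poll_keyboard` only ever calls `inb`. It has no way to tell whether the byte it got back came from a keyboard controller or from a handler making things up.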

Since then we've seen even more stuff get crammed into SMM, which is annoying: in general it's much harder for an OS to do interesting things with hardware if the CPU occasionally stops in order to run invisible code that touches hardware resources you were planning on using. And that's even ignoring the fact that operating systems don't really appreciate the entire world stopping and then restarting some time later without any notification. So, overall, SMM is a pain for OS vendors.

Change of topic. When Apple moved to x86 CPUs in the mid 2000s, they faced a problem. Their hardware was basically now just a PC, and that meant people were going to try to run their OS on random PC hardware. For various reasons this was unappealing, and so Apple took advantage of the one significant difference between their platforms and generic PCs. x86 Macs have a component called the System Management Controller that (ironically) seems to do a bunch of the stuff that the 386SL was designed to do on the CPU. It runs the fans, it reports hardware information, it controls the keyboard backlight, it does all kinds of things. So Apple embedded a string in the SMC, and the OS tries to read it on boot. If it fails, so does boot[2]. Qemu has a driver that emulates enough of the SMC that you can provide that string on the command line and boot OS X in qemu, something that's documented further here.

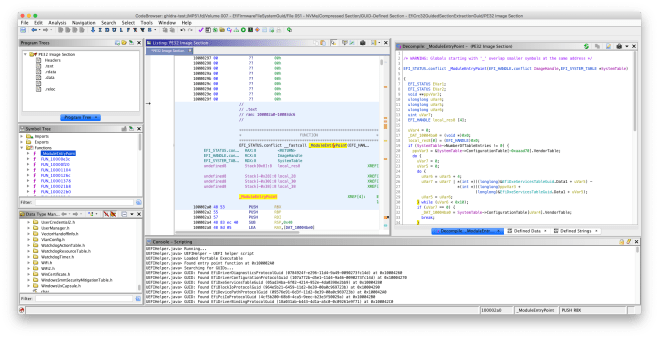

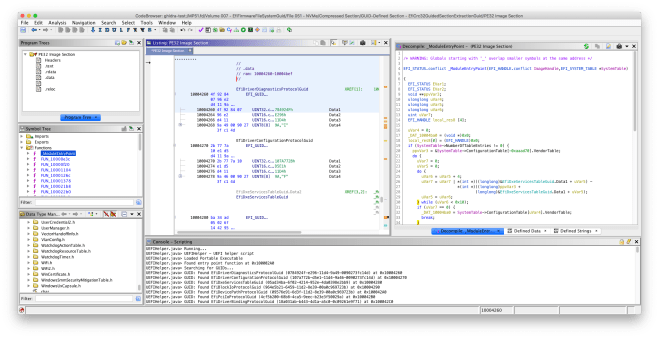

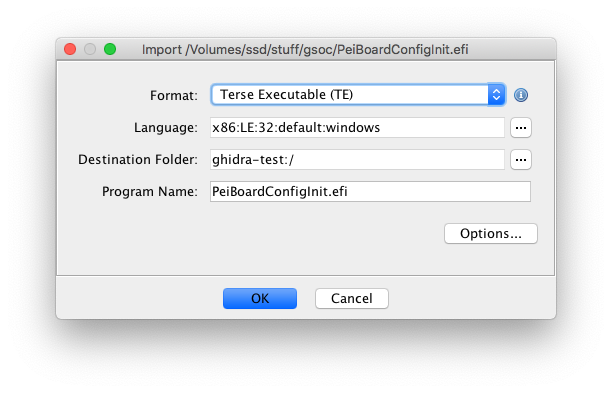

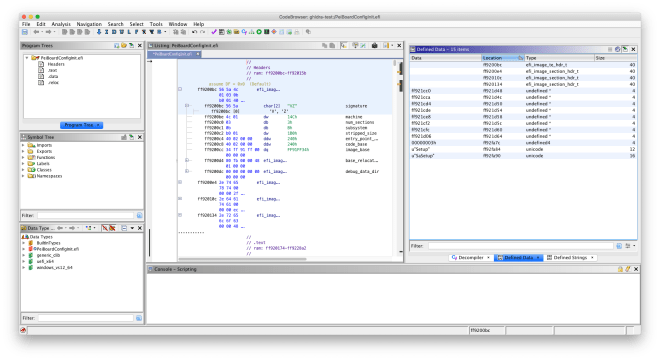

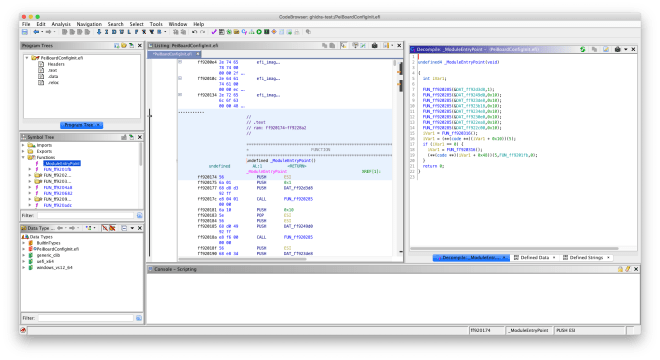

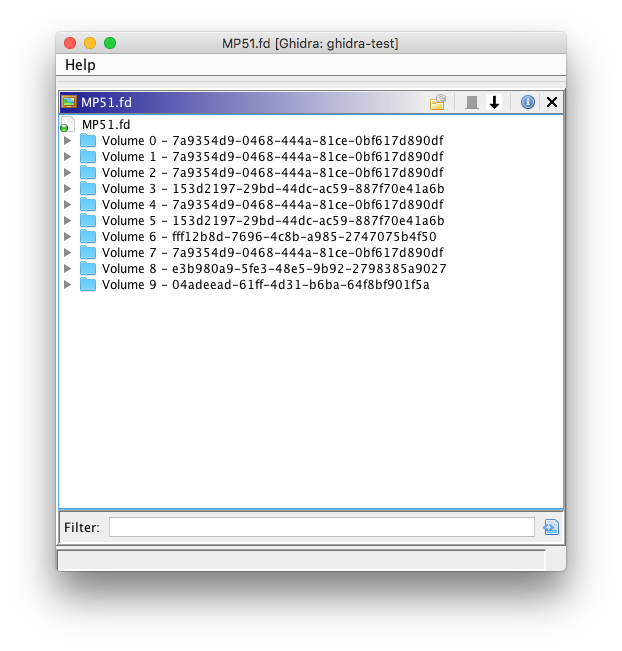

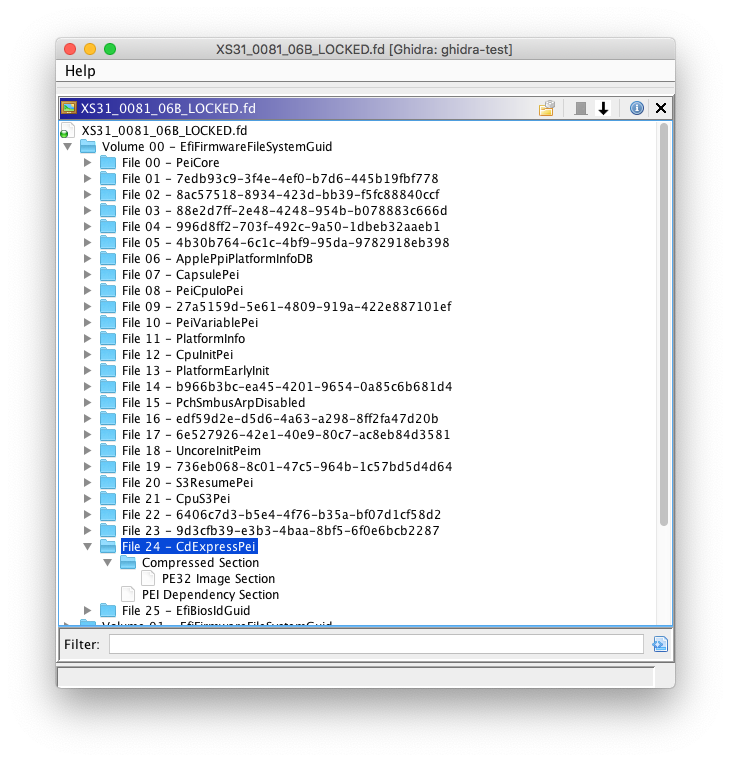

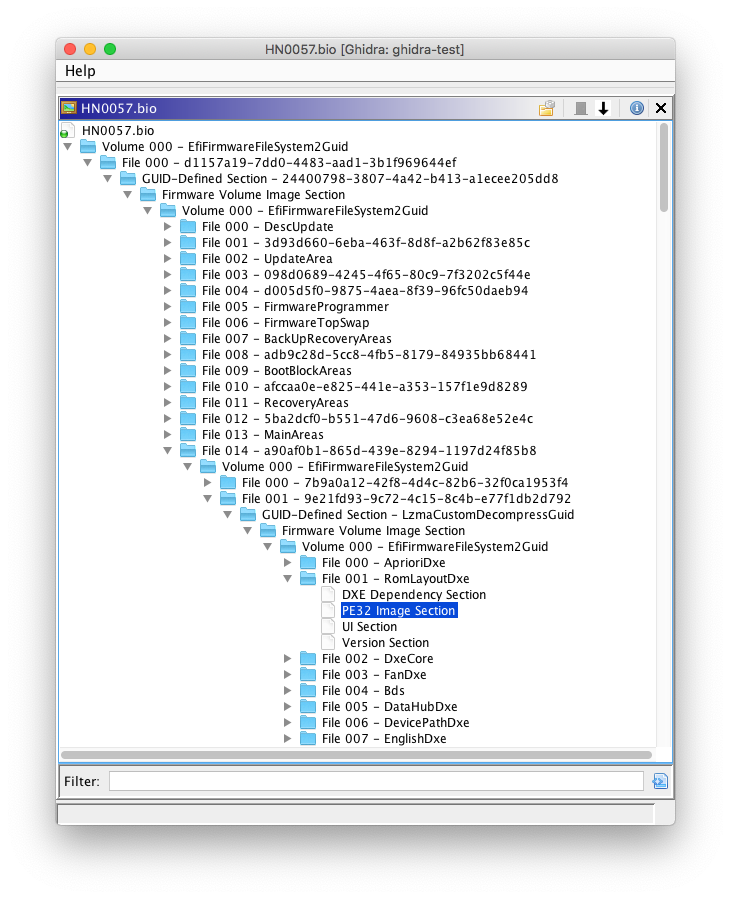

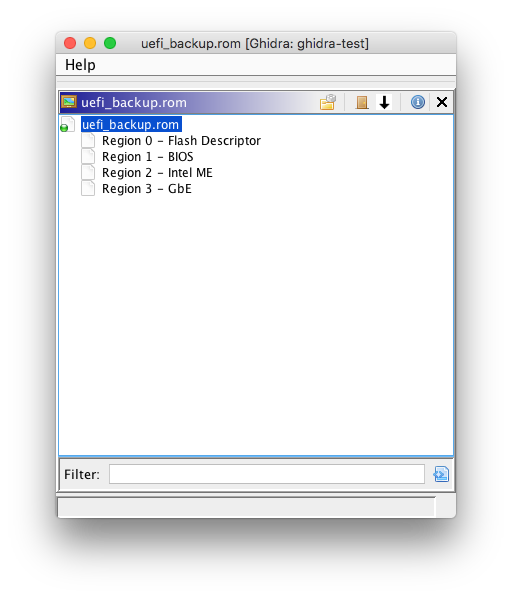

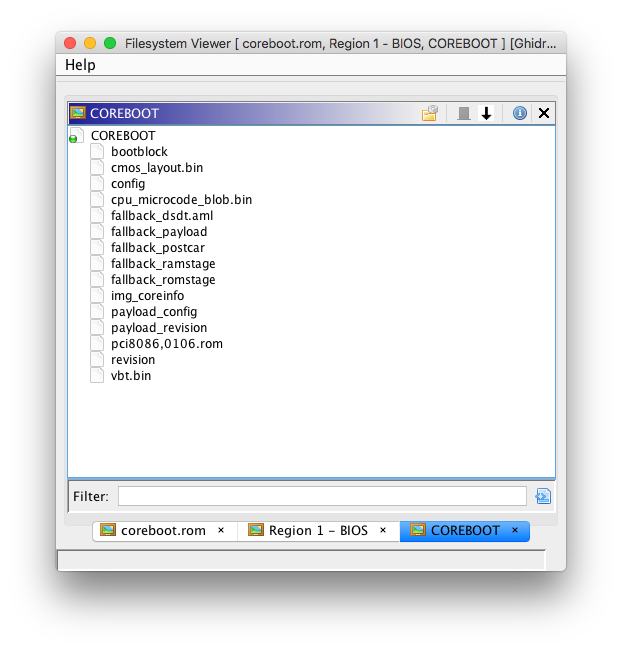

What does this have to do with SMM? It turns out that you can configure x86 chipsets to trap into SMM on arbitrary IO port ranges, and older Macs had SMCs in IO port space[3]. After some fighting with Intel documentation[4] I had Coreboot's SMI handler responding to writes to an arbitrary IO port range. With some more fighting I was able to fake up responses to reads as well. And then I took qemu's SMC emulation driver and merged it into Coreboot's SMM code. Now, accesses to the IO port range that the SMC occupies on real hardware generate SMIs, trap into SMM on the CPU, run the emulation code, handle writes, fake up responses to reads and return control to the OS. From the OS's perspective, this is entirely invisible[5]. We've created hardware where none existed.
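As a rough illustration of that flow, here is a toy Python model of an IO port range being trapped and answered by an emulator. It is a sketch under several assumptions, not the Coreboot code: the port numbers (base 0x300, data at offset 0, command at offset 4, read command 0x10) are assumed to match what qemu's applesmc device and the Linux applesmc driver use, the protocol skips all the status handshaking a real SMC does, the 0x20-port trap range is arbitrary, and the key name `DEMO` and its value are placeholders rather than anything Apple ships. All class and function names are hypothetical.

```python
# Assumed port layout; see the caveats in the text above.
SMC_BASE = 0x300
SMC_DATA_PORT = SMC_BASE + 0x0
SMC_CMD_PORT = SMC_BASE + 0x4
SMC_READ_CMD = 0x10


class ToySmcEmulator:
    """Hypothetical SMM-side state machine answering SMC key reads."""

    def __init__(self, keys):
        self.keys = dict(keys)  # 4-byte key name -> bytes value
        self.smi_count = 0
        self._reset()

    def _reset(self):
        self.state = "idle"
        self.key = b""
        self.reply = b""

    def handle_write(self, port, value):
        self.smi_count += 1
        if port == SMC_CMD_PORT:
            self._reset()
            if value == SMC_READ_CMD:
                self.state = "key"  # next four data writes name the key
        elif port == SMC_DATA_PORT:
            if self.state == "key":
                self.key += bytes([value])
                if len(self.key) == 4:
                    self.state = "length"
            elif self.state == "length":
                # Unknown keys produce an empty reply in this toy model.
                self.reply = self.keys.get(self.key, b"")[:value]
                self.state = "reply"

    def handle_read(self, port):
        self.smi_count += 1
        if port == SMC_DATA_PORT and self.state == "reply" and self.reply:
            byte, self.reply = self.reply[0], self.reply[1:]
            return byte
        return 0x00


class ToyChipset:
    """Traps an inclusive IO port range and hands accesses to the handler."""

    def __init__(self, handler, first_port, last_port):
        self.handler = handler
        self.trap = range(first_port, last_port + 1)

    def outb(self, port, value):
        if port in self.trap:
            self.handler.handle_write(port, value & 0xFF)
        # Writes to untrapped ports are simply dropped in this model.

    def inb(self, port):
        if port in self.trap:
            return self.handler.handle_read(port)
        return 0xFF  # assumption: nothing answers on untrapped ports


def os_read_smc_key(chipset, name, length):
    """The OS side: issue a read command, name the key, read the value."""
    chipset.outb(SMC_CMD_PORT, SMC_READ_CMD)
    for b in name:
        chipset.outb(SMC_DATA_PORT, b)
    chipset.outb(SMC_DATA_PORT, length)
    return bytes(chipset.inb(SMC_DATA_PORT) for _ in range(length))
```

With `ToySmcEmulator({b"DEMO": b"placeholder-value"})` behind a `ToyChipset` covering `SMC_BASE` to `SMC_BASE + 0x1F`, `os_read_smc_key(chipset, b"DEMO", 11)` gets back the first 11 bytes of the stored value. Every single port access in that exchange is a separate trap, which is why `smi_count` climbs so quickly.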

The tree where I'm working on this is here, and I'll see if it's possible to clean this up in a reasonable way to get it merged into mainline Coreboot. Note that this only handles the SMC - actually booting OS X involves a lot more, but that's something for another time.

[1] If the OS attempts to access this range, the chipset directs it to the video card instead of to actual RAM.

[2] It's actually more complicated than that - see here for more.

[3] IO port space is a weird x86 feature where there's an entire separate IO bus that isn't part of the memory map and which requires different instructions to access. It's low performance but also extremely simple, so hardware that has no performance requirements is often implemented using it.

[4] Some current Intel hardware has two sets of registers defined for setting up which IO ports should trap into SMM. I can't find anything that documents what the relationship between them is, but if you program the obvious ones nothing happens and if you program the ones that are hidden in the section about LPC decoding ranges things suddenly start working.

[5] Eh, technically a sufficiently enthusiastic OS could notice that the time it took for the access to occur didn't match what it should be on real hardware, or could look at the CPU's count of the number of SMIs that have occurred and correlate that with accesses, but good enough.
