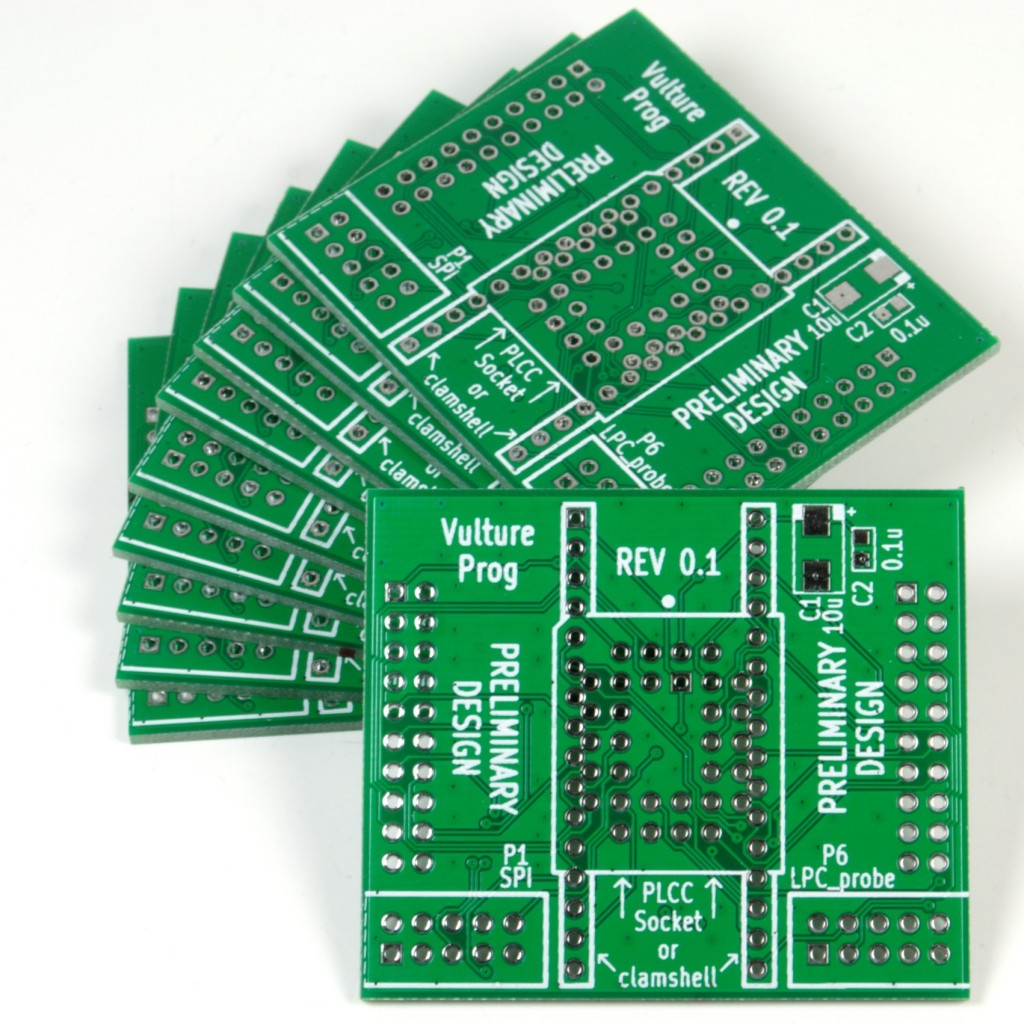

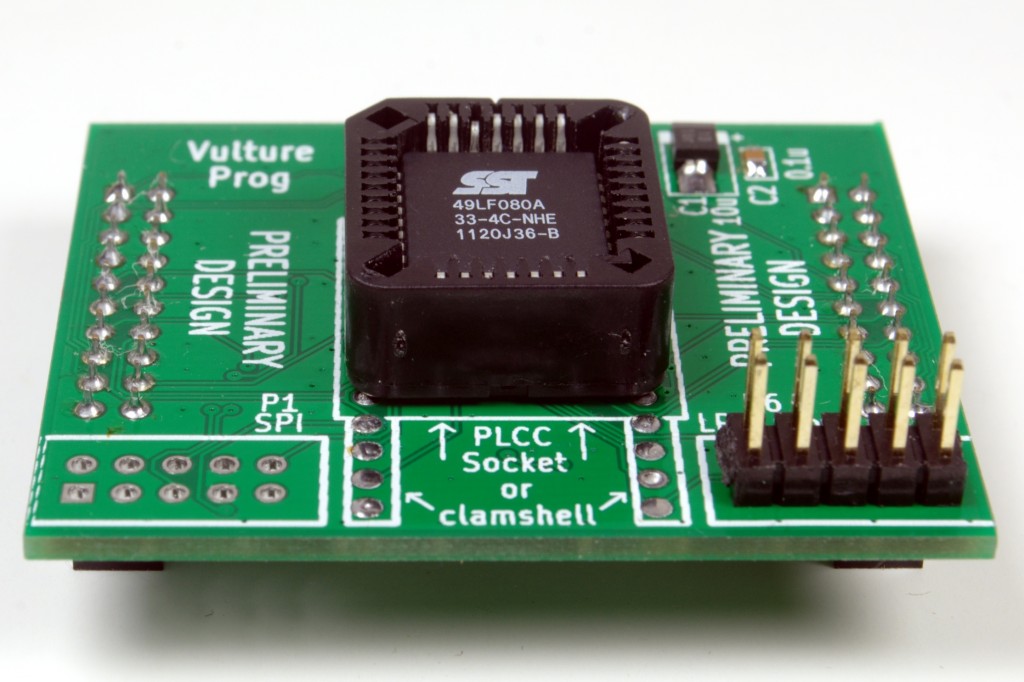

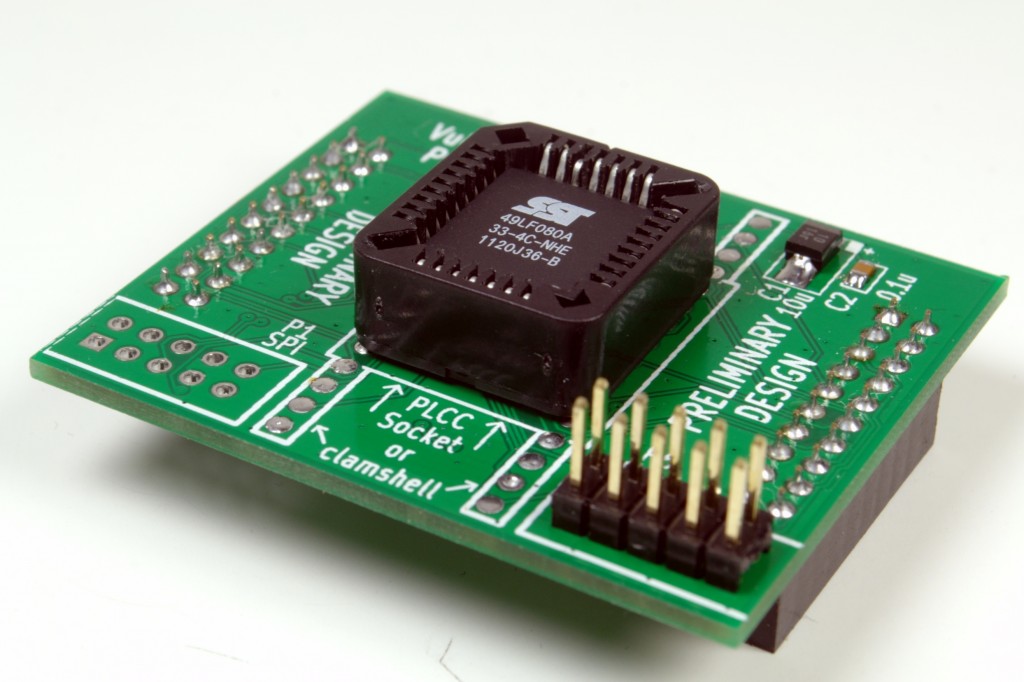

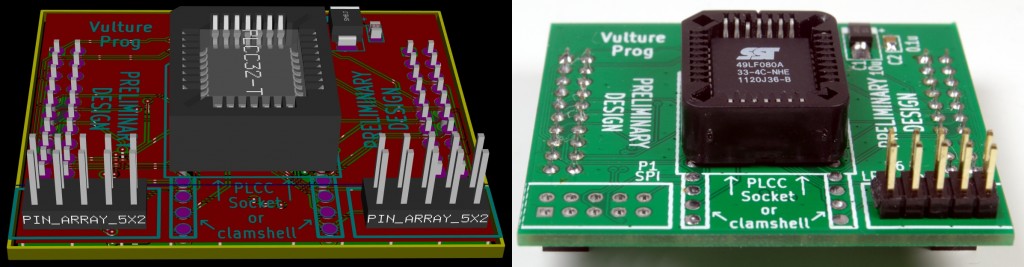

Today is disappointing. I was expecting to have the first batch of VultureProg PCBs by now. The package arrived in the US on Tuesday, and I did not expect it to hover in New York for most of this week. The need to eat the spaghetti and eliminate the hanging wires grows more urgent with each speed increase. The long wires and their insane inductance are already getting in the way of the signals, the lack of physical portability of the test setup is annoying, and the pain of accidentally knocking out a few wires is unbearable. Where are my PCBs?!!!

High speed tests

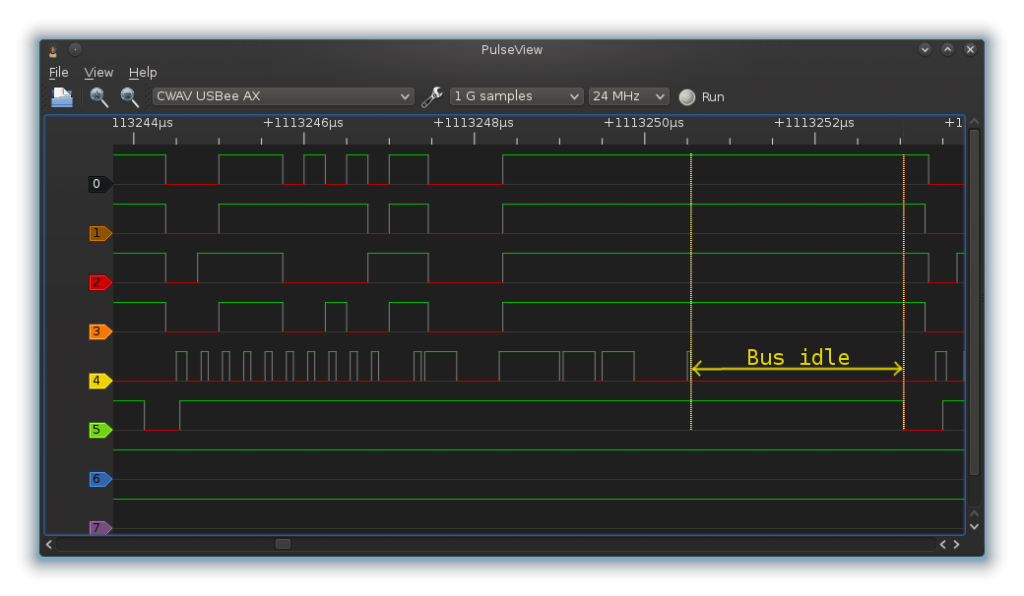

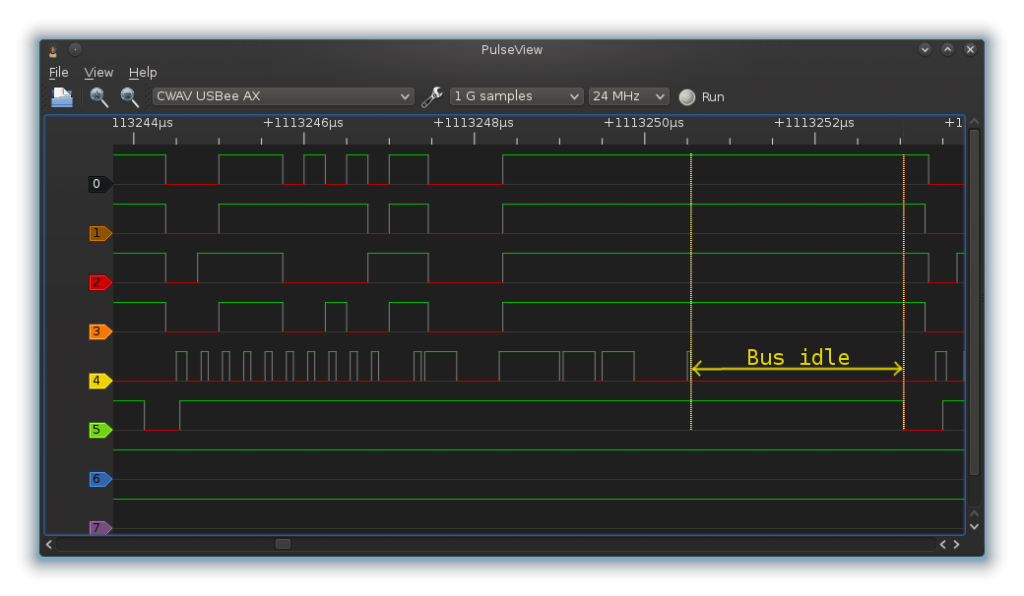

The Stellaris is getting faster, much faster than initially predicted. From the pathetic 23 KiB/s read speed during the first tests, it now comfortably does over 450 KiB/s. The emulated LPC bus goes so fast that I need to lower the CPU’s core clock to a measly 16MHz just to capture all the details of the waveforms.

Too much impedance

24MHz logic analyzer + Nyquist theorem = 12+MHz LPC clock

We’re pushing a 12MHz signal through 20+ centimeters of hanging wire, routed through a solderless breadboard and then a socket, with the added load of the probe wires. I was getting random errors or bad data, yet as soon as I disconnected the probe wires, everything magically worked. It must have had something to do with signal rise and fall times. On the Stellaris, this was relatively easy to cheat and fix by increasing the drive strength.

Where’s my bandwidth?

450 KiB/s * 1024 B/KiB * 17 clocks/byte ≈ 8 MHz LPC clock
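The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `lpc_clock_hz` is made up for illustration, and the 17 clocks per byte figure is taken from the formula above, assuming a bus that never idles.

```python
def lpc_clock_hz(throughput_kib_s, clocks_per_byte=17):
    """Bus clock needed to sustain a read throughput with no idle cycles.

    clocks_per_byte=17 is the per-byte cost used in the post's formula;
    it is an input here, not something this sketch derives.
    """
    bytes_per_second = throughput_kib_s * 1024  # KiB/s -> B/s
    return bytes_per_second * clocks_per_byte   # clocks per second, i.e. Hz


if __name__ == "__main__":
    clock = lpc_clock_hz(450)
    print(f"{clock / 1e6:.2f} MHz")  # prints "7.83 MHz"
```

So 450 KiB/s only accounts for a bit under 8 MHz worth of bus cycles.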

Something does not add up. We know we’re running at over 12MHz because we cannot sample the signal well, yet the throughput accounts for a much lower clock. The USB on the Stellaris is easily capable of 1 MiB/s. To quote Seconds from Disaster, “when investigators looked at […], what they found shocked them”:

The LPC bus is idle 30% of the time. We’re driving the bus so fast that loop overhead is not only noticeable, but significant. Killing this overhead has the potential to bring the speed to 600 KiB/s.
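As a rough sanity check on that estimate, here is a small sketch of the best case. It assumes, hypothetically, that every idle cycle could be turned into a useful transfer; the function name `throughput_without_idle` is invented for this example. Real firmware will keep some loop overhead, so treat the result as an upper bound.

```python
def throughput_without_idle(measured_kib_s, idle_fraction):
    """Best-case throughput if the bus were never idle.

    Assumes throughput scales linearly with the busy share of bus time,
    which is a simplification.
    """
    if not 0 <= idle_fraction < 1:
        raise ValueError("idle_fraction must be in [0, 1)")
    return measured_kib_s / (1 - idle_fraction)


if __name__ == "__main__":
    best_case = throughput_without_idle(450, 0.30)
    print(f"{best_case:.0f} KiB/s")  # prints "643 KiB/s"
```

The 600 KiB/s figure leaves a bit of room below this ceiling for the overhead that cannot be removed.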

Insect season

It’s a hot summer in Houston, with air conditioning running around the clock. Stepping into the hot, wet weather outside results in an instant cascade of sweat. Insects are crawling from every nook and cranny, fire ants are spawning from the underground in huge mounds, and mosquitoes are raising their own deadly army of high-pitched buzzers. The QiProg and VultureProg trees are no different.

I’ve made the executive decision to fix bugs as soon as they get in the way. I intentionally avoid the term “found”. I can find my own damn bugs; it’s fixing them that is the problem. I prefer to have all the pieces in place before I polish and shine them.

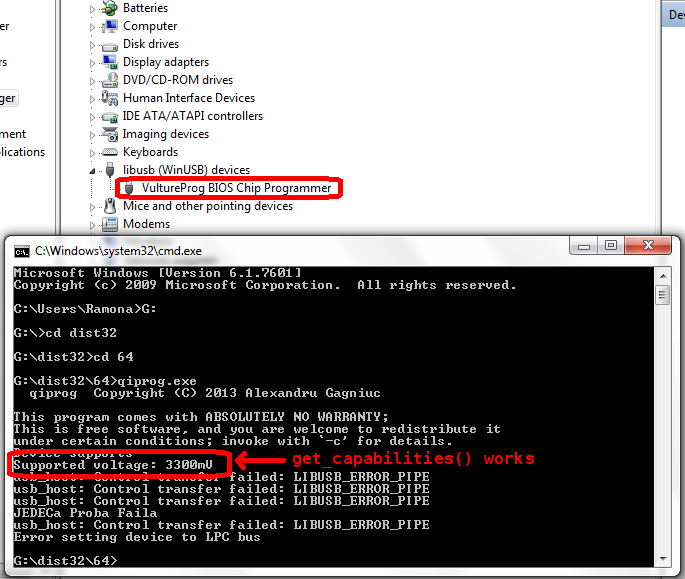

A ripe testing ground

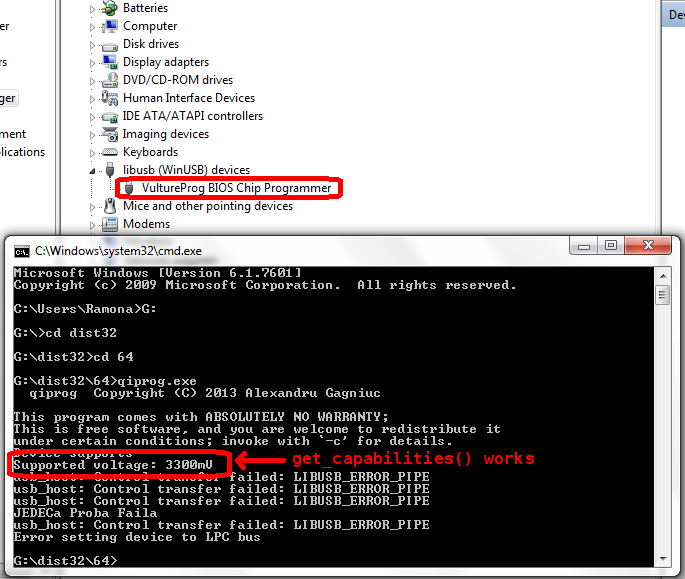

Out of curiosity, I wanted to see if VultureProg would work on Install’n’Pray operating systems (InP). InP are known for their excellent ability to expose even the smallest, most innocent problems, and to expose problems where there are none. If VultureProg can work in an InP environment, it most certainly will be stable in unix-like environments. Although it did not work at first, testing on InP has led to a number of fixes.

Where next?

We have bulk reading completed, which was initially scheduled for week 6. Weeks 7 and 8 look scary, with a lot of goodies, including program and erase functionality. I’ve decided to peek early into how to program and erase LPC chips. I found flashrom’s jedec.c to be of great help: QiProg knows how to byte-program and erase JEDEC-compliant chips. A lot is still scattered in topic branches here and there, waiting for someone’s mercy to be merged. Somebody please send me some coffee.