Apologies for the late update. The design I described in my last post turned out to be more challenging than I had expected. However, I’m happy to announce that my test hardware, which I call the ‘coreboot test interface board’ (TIB), is now complete. Only part of the software interface remains to be done. So let me share a very quick update on the last month.

Category: coreboot

GSoC [early debugging] More connectivity

A substantial cleanup of CBMEM initialisation is now under review. The goal is to get timestamps and the CBMEM console supported on more, ideally most, mainboards, but I do not expect to complete this during the official GSoC period or within the next two weeks.

One of the goals I had originally set was to have a means to re-program the flash chip from a pre-OS environment. It is now clear I will not have time to finish (or even start) this part of the project. The decision to delay this part was actually made quite early on. I learned that similar work had already been developed as a combination of FILO+libflashrom, and I hope Stefan’s efforts on another GSoC project will help get this code published in the near future. I might still try to get the FILO console to appear over usbdebug; adding support for the USB communications device class (CDC / ACM) in libpayload should not be very difficult.

I have saved some of the most interesting and challenging parts for last: having SerialICE and GDB run over usbdebug. Hopefully I will get to report on those next week.

GSoC [early debugging] Bridging the gap

ARM is now on the table, as I am bridging the coreboot console from USB to gigabit ethernet, using a BeagleBone board equipped with the USB debug device gadget driver. At first I was a bit concerned about the latency that a software USB-to-ethernet bridge would add to the communication, so now was a good time to do some measurements.

I used a daemon called ser2net to redirect the coreboot console TTY to TCP (telnet) and enabled timestamping to estimate the time it takes from power-on to entering the payload on amd/persimmon with maximum logging (level=spew).

First, connecting with a serial UART at 115200 bps on an x86 host, the total was 15 seconds. Of this, 4 seconds were spent in AGESA doing some SPI flash operations, and during that time there was no console output.

Repeating the same using usbdebug on the BeagleBone OTG port, the total time was 9 seconds, and again 4 seconds were spent waiting for SPI completion. With the SPI wait subtracted, that is roughly 11 seconds versus 5, so I would say we have a rough figure that console output on usbdebug is twice as fast as what super-IO can typically offer.
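The comparison above is simple arithmetic, and a minimal sketch of it follows. The function names are made up for illustration, and it assumes that the 4-second SPI wait is identical in both runs and that the rest of the boot time is dominated by console output.

```c
#include <assert.h>

/* Seconds of boot time left once a silent wait (no console output) is removed. */
static double console_seconds(double total_s, double silent_s)
{
    assert(total_s >= silent_s);
    return total_s - silent_s;
}

/* How many times faster the second console is, ignoring the shared silent wait. */
static double console_speedup(double slow_total_s, double fast_total_s,
                              double silent_s)
{
    return console_seconds(slow_total_s, silent_s) /
           console_seconds(fast_total_s, silent_s);
}
```

With the measured totals, `console_speedup(15.0, 9.0, 4.0)` compares 11 seconds against 5 seconds, which is a little over a factor of two.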

Driver compilation and patches are updated here: http://www.coreboot.org/EHCI_Gadget_Debug

To use the BeagleBone, some additional work was needed on the coreboot side, but the BeagleBone Black and other ARM boards where there is no hub between the OTG connector and the controller should already work.

Retrofitting QiProg for the space age

Over the previous two posts, a bigger issue has become more and more apparent: the USB QiProg protocol is not completely specified. I didn’t think much of the “TODO” items in Peter’s original protocol. I figured I would work them out as I went along, and I did work out a lot of little details as I went along. Of course, none of those details related to implementing the big TODOs. So, here I am in week 11(?) with an incomplete protocol.

Last week did not see much coding. I spent some quality time with premium cigars, a pen, and engineering paper. I wrote the name of each function I needed to implement, left a blank space, wrote the name of the next function, and so on. Then, I drew the packets, laid out their structure, went through the process of thought experimentation, crumpled the piece of paper, threw it away, and started from scratch. I eventually settled on a protocol “completion” which I thought was acceptable. I wrote it up in digital form and submitted it to Peter for review.

Communication with Peter is asynchronous. Very rarely do we both meet up on IRC to discuss the details in real time. Therefore, I decided to implement the “completion” protocol as-is, and modify the implementation later should the protocol change. This should keep me occupied with coding. I am very happy I can get back to coding. Working out protocol details is exhausting and boring.

It is a bit frustrating. We have firmware code to read, erase, and byte-program, yet the puzzle is not complete. I was expecting the LPC bus mastering to be the most demanding, and USB communication to be an almost transparent add-on. That would have been fun! The reality is the opposite, but, besides the aforementioned frustration, it’s still fun — albeit a different type of “fun”. It’s time to scrap weekend plans for the next couple of weeks, and kick in the overtime. Until next time, get your Stellaris Launchpads, order your VultureProg PCBs, and stay tuned. This baby is going to run smokin’ hot.

A brief progress sheet

Last week, I laid out a list of things to do in order to get more of the protocol finalized. Most of the items are crossed off; however, one little item remains standing. This item, while innocent and seemingly harmless, is more painful than falling on your buttocks from a 10-story building onto solid granite. Let’s have a look at why this small item is of such significance.

- new API call set_chip_size()

When people think of QiProg, they think of one gadget with one flash chip connected. This is the common case, and, for the foreseeable future, will be the de facto way of using QiProg. However, the original USB specification was intended for a broader use case: a programmer with several individually addressable chips connected. One who observes the qiprog_read_chip_id() call will notice that it translates to a READ_CHIP_ID request over USB. This request will return identification data for up to nine chips. Aye, there’s the rub.

How does this play into set_chip_size()? Simple: set_chip_size() for which chip? Do we send a flat list of nine uint32_t sizes, thus only needing one round-trip (control request) for all chips? Do we use the wIndex field of the control request, at the cost of needing one such round-trip for each chip? Once this question is answered, it will determine the answer for the set_[erase/write]_[size/command] calls and their respective USB round-trips, thus completing the USB protocol and bringing QiProg to a usable state.
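To make the wIndex option concrete, here is a minimal sketch of how one such control request could be laid out on the wire. The 8-byte setup packet layout and the 0x40 vendor request type come from the USB specification; the request code, the function name, and the little-endian 4-byte payload are placeholders invented for this illustration, not part of the QiProg protocol.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QIPROG_MAX_CHIPS       9     /* READ_CHIP_ID reports up to nine chips */
#define HYPO_REQ_SET_CHIP_SIZE 0x30  /* placeholder bRequest, NOT from the spec */

/*
 * Serialize a vendor control request (8-byte setup packet followed by a
 * 4-byte payload) that sets the size of ONE chip, selected by wIndex.
 * Returns the number of bytes written, or -1 for an invalid chip index.
 */
static int build_set_chip_size(uint8_t out[12], uint16_t chip_idx, uint32_t size)
{
    assert(out != NULL);
    if (chip_idx >= QIPROG_MAX_CHIPS)
        return -1;

    out[0] = 0x40;                      /* bmRequestType: host-to-device, vendor, device */
    out[1] = HYPO_REQ_SET_CHIP_SIZE;    /* bRequest (assumed value) */
    out[2] = 0;                         /* wValue, low byte (unused here) */
    out[3] = 0;                         /* wValue, high byte */
    out[4] = (uint8_t)(chip_idx & 0xff); /* wIndex, low byte: which chip */
    out[5] = (uint8_t)(chip_idx >> 8);   /* wIndex, high byte */
    out[6] = 4;                         /* wLength, low byte: one uint32_t follows */
    out[7] = 0;                         /* wLength, high byte */

    /* Payload: chip size in bytes, little-endian (an assumption). */
    out[8]  = (uint8_t)(size & 0xff);
    out[9]  = (uint8_t)((size >> 8) & 0xff);
    out[10] = (uint8_t)((size >> 16) & 0xff);
    out[11] = (uint8_t)((size >> 24) & 0xff);
    return 12;
}
```

The flat-list alternative would instead carry nine such sizes (36 bytes) in a single request with wIndex unused, trading one round-trip per chip for a larger, fixed payload.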

It’s easy to see why this one little detail is a blocker for all other remaining issues. I am leaning towards the use of wIndex (not the glass cleaner). Implementing a new control request in software and firmware is a matter of minutes. Testing it and making sure it works properly is, at most, a two-hour endeavor. Getting the design right: priceless.

GSoC [early debugging] USB submission

It seems I am reaching one of the goals of my original GSoC proposal: making usbdebug available as a compile option for most of the mainboards with a compatible chipset.

As I wrote in an earlier blog post, it has been more of a refactoring job on existing code than creating something entirely new. There is a series of protocol details where I spotted that the implementation took some shortcuts, and I have attempted to fix those. I also made improvements to better control how a possible debug dongle connection is probed. More testing is needed there, and it also needs to be fixed so that it does not become excessively slow when the dongle is not connected or is disconnected before the OS is loaded.

At the time of writing, my patches have not yet been submitted to master but are available on the gerrit review board. It is likely there will be some minor fixes, so I will not give exact commit hashes one should check out and merge. In short: check out and merge the two topic branches usbdebug-cfg and usbdebug-lib. In addition, for AMD AGESA boards one needs “AMD AGESA: Place CAR_GLOBAL in BSP stack”.

Now if you only had something to connect it with, I could ask for your help actually testing these changes and finding out if it works! I am still waiting for my BeagleBone Black to arrive to make some fixes to the kernel EHCI debug gadget driver, and the situation with the choice of debug dongles will then improve quite radically. I do have the older BeagleBone and have built custom kernel modules for it, and I have started to study how the g_dbgp driver interacts with the gadget serial port framework.

What I discovered after picking up a second-hand original BeagleBone was that it does not have its USB port directly connected to the ARM chip; there is a USB hub chip in between. It might be possible to configure that hub even though our USB requests are limited to a maximum length of 8 bytes per transaction. The EHCI debug specs do not allow a hub there, but if we can make it work, why not do it?
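The 8-byte limit shapes everything sent through the debug port, including console output. As a rough sketch of what that means in practice, the helper below splits a buffer into transactions of at most 8 bytes. The function names and the callback interface are hypothetical and do not come from coreboot's usbdebug code.

```c
#include <assert.h>
#include <stddef.h>

#define DBGP_MAX_PACKET 8  /* data bytes per debug-port transaction */

/* Callback that sends one chunk; returns 0 on success (hypothetical interface). */
typedef int (*dbgp_send_fn)(const unsigned char *chunk, size_t len, void *ctx);

/* Example sink that only counts the bytes it is handed. */
static int dbgp_count_sink(const unsigned char *chunk, size_t len, void *ctx)
{
    (void)chunk;
    *(size_t *)ctx += len;
    return 0;
}

/*
 * Send `len` bytes as a series of transactions of at most 8 bytes each.
 * Returns the number of transactions used, or -1 if a send failed.
 */
static int dbgp_write_chunked(const unsigned char *buf, size_t len,
                              dbgp_send_fn send, void *ctx)
{
    size_t off = 0;
    int transactions = 0;

    assert(send != NULL);
    while (off < len) {
        size_t n = len - off;
        if (n > DBGP_MAX_PACKET)
            n = DBGP_MAX_PACKET;
        if (send(buf + off, n, ctx) != 0)
            return -1;
        off += n;
        transactions++;
    }
    return transactions;
}
```

A 17-byte console line therefore costs three transactions. A standard USB setup packet is exactly 8 bytes long, which is why configuring a hub through the debug port is at least conceivable.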

Experiments of mind

The time for writing code is over. The time to design hardware is over. After seven weeks, the beginning has come to an abrupt end. I am severely behind schedule. In week seven I was supposed to implement erase functionality, that is, tell the programmer how to erase the chip. This is not done. On the other hand, I have had code for weeks 8 and 9 almost ready, and just merged most of it last week. So, where am I? Am I ahead or behind schedule?

The fallacy of preemption

One of the requirements for applying as a GSoC coreboot student was to have a fully established schedule from day[-1]. Establishing this schedule was a great experience, and it allowed me to think in depth about the problem and possible solutions, at least to a certain degree. I picked the steps I considered logical, in the order I saw as logical. Development is never about writing code in the order in which it will be executed. In this particular case, it was much easier to implement bulk writing without a predefined erase/write strategy, opting instead for a default just-do-it approach.

Why is this approach better than following the schedule, from a development point of view? We have had bulk read partially working for a while now. From the host point of view, reading and writing are symmetrical operations. The bulk of the code (pun definitely intended) is shared between the read and write operations. They both juggle data on the same endpoint. The only difference is the endpoint direction bit. It therefore made sense, once bulk reading was fixed for corner cases, to use the same code to send data to the programmer. Making the programmer write that data was a matter of a couple of hours. There was no sensible reason to wait an additional two weeks before implementing this last bit.
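The symmetry can be seen in how USB encodes endpoint addresses: bit 7 of the endpoint address selects the direction (set for IN, clear for OUT), while the low four bits carry the endpoint number. A minimal sketch follows, with a hypothetical helper name and enum; the endpoint number QiProg actually uses is not stated here.

```c
#include <assert.h>
#include <stdint.h>

#define USB_ENDPOINT_DIR_IN 0x80u  /* bit 7 of bEndpointAddress: device-to-host */

enum bulk_dir { BULK_WRITE = 0, BULK_READ = 1 };

/*
 * Compute the endpoint address for a bulk transfer. Reads and writes share
 * the endpoint number; only the direction bit differs.
 */
static uint8_t bulk_endpoint_address(uint8_t ep_num, enum bulk_dir dir)
{
    uint8_t addr;

    assert(ep_num <= 15);
    addr = (uint8_t)(ep_num & 0x0fu);
    if (dir == BULK_READ)
        addr = (uint8_t)(addr | USB_ENDPOINT_DIR_IN);
    return addr;
}
```

For endpoint number 1 (chosen arbitrarily), this gives 0x81 for reading and 0x01 for writing, so a shared transfer routine only needs the direction as a parameter.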

Software development work is as much about making things work as it is about the application of programming principles with unquestionable moral authority and correctness. In this case, implementing a trivial extension reusing code fresh in my mind was the preferred approach. Not only did it save me time by not having to re-examine the situation a few weeks from now, it also allows me to have a working program/verify scenario when implementing the erase strategies. As one might imagine, this makes the problem a lot easier. Attempting to preempt and enforce a schedule before the problem is thoroughly explored occasionally conflicts with best practices of development. With this in mind, I am neither behind nor ahead of schedule. I am precisely where I need to be.

A matter of experimentation

Most of the infrastructure and code is already in place. Bringing QiProg to completion is no longer an issue of adding functionality through code, but rather completing functionality by connecting the existing code. One issue I discovered after testing the bulk program code was a terrible race condition between read prefetching and the write loop. The prefetch logic incremented the internal address before data arrived. As a result, the new data would get written at the wrong address. Choosing the best solution to the problem is a matter of experimentation.
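The race is easy to reproduce in a toy model. The sketch below is not the VultureProg firmware; the structure names, function names, and the 64-byte prefetch length are invented for illustration. The first variant shares one address pointer between prefetch and write, the second keeps them separate, which is the direction the decision list in this post settles on.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PREFETCH_LEN 64u  /* hypothetical prefetch size in bytes */

/* Variant 1: one internal address shared by reads and writes. */
struct single_pointer_prog { uint32_t addr; };

/* Variant 2: independent read and write pointers. */
struct dual_pointer_prog { uint32_t read_addr; uint32_t write_addr; };

/* Prefetch advances the shared address before the host has asked for data. */
static void single_prefetch(struct single_pointer_prog *p)
{
    assert(p != NULL);
    p->addr += PREFETCH_LEN;
}

/* Returns the address the written byte lands on, then advances. */
static uint32_t single_write_byte(struct single_pointer_prog *p)
{
    assert(p != NULL);
    return p->addr++;
}

/* With separate pointers, prefetch only touches the read side. */
static void dual_prefetch(struct dual_pointer_prog *p)
{
    assert(p != NULL);
    p->read_addr += PREFETCH_LEN;
}

static uint32_t dual_write_byte(struct dual_pointer_prog *p)
{
    assert(p != NULL);
    return p->write_addr++;
}
```

Starting both variants at 0x1000 and letting one prefetch run first, the shared-pointer write lands on 0x1040 while the separate-pointer write lands on 0x1000 as intended.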

The “this won’t work because of that” and “what if this” turned into a series of exhausting thought experiments. I have been bugging Peter a lot in the past few days about a series of potential issues. Through tiring thought experimentation, we eventually agreed that the best way to proceed was to abstract a lot more through the API. This is a non-exhaustive list of the decisions we’ve made in the past week:

- set_address() is hidden from the API

- the internal address range is not exhausted once read or written

- read and write operations must not be interdependent; the internal read and write pointers will be distinct (as a side effect, this change also eliminates the race condition described above)

- set_address() + readn() turns into read(dev, where, n)

- All API addresses begin at 0. The programmer translates that into an absolute address

- new API call set_chip_size()

- new API call to explicitly erase blocks or sectors (to be defined)

- implicit erase on write can be enabled or disabled (to be defined)

- implicit erase will erase the sector/block right before the first byte of the sector is written

- exposing any USB specific dependencies in the API is strictly forbidden
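Two of the decisions above, zero-based API addresses and the new set_chip_size() call, fit together naturally: the programmer needs the chip size to turn a zero-based offset into an absolute address. The sketch below shows one way that translation could look. It assumes the chip is mapped at the very top of the 32-bit address space, as is typical for LPC/FWH firmware flash; the post does not confirm this, and the structure and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical programmer-side state, filled in by a set_chip_size() call. */
struct prog_state { uint32_t chip_size; };

/*
 * Translate a zero-based API address into an absolute bus address for a
 * transfer of `n` bytes. Assumes the chip occupies the top `chip_size`
 * bytes of the 32-bit address space. Returns 0 on success, -1 if the
 * range does not fit inside the chip.
 */
static int translate_address(const struct prog_state *st, uint32_t where,
                             uint32_t n, uint32_t *abs_out)
{
    assert(st != NULL && abs_out != NULL);
    if (st->chip_size == 0 || where >= st->chip_size ||
        n > st->chip_size - where)
        return -1;

    /* Base = 2^32 - chip_size, computed with 32-bit wraparound. */
    *abs_out = (uint32_t)(UINT32_C(0) - st->chip_size) + where;
    return 0;
}
```

For a 1 MiB chip, API address 0 maps to 0xFFF00000 and the last byte maps to 0xFFFFFFFF. A read(dev, where, n) call would run this check before touching the bus, which keeps bus-level addressing out of the host-side API.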

My focus for the remainder of this week will be to shorten this list as much as possible. Once the dependency between read and write is unshackled, I will be able to erase/program/verify my faithful SST 49LF080A. From here, it will be a matter of finalizing and implementing the last obscure bits of the specification.

The state of QiProg for flashrom

As QiProg is still being finalized, implementing it as a flashrom programmer is still a long way off. I do estimate that weeks 11 and 12 will provide ample time to integrate everything into flashrom, hopefully in time for the 0.9.8 release.

GSoC (coreboot): Week 5-7 – Redesigning the test-interface-board

In the last blog post I talked about the test-interface-board. There were some concerns about the use of the FT232H chip, and extra features like voltage and temperature measurement turned out not to be required. Taking all the suggestions into account and improving things on my side, I have redesigned the board.

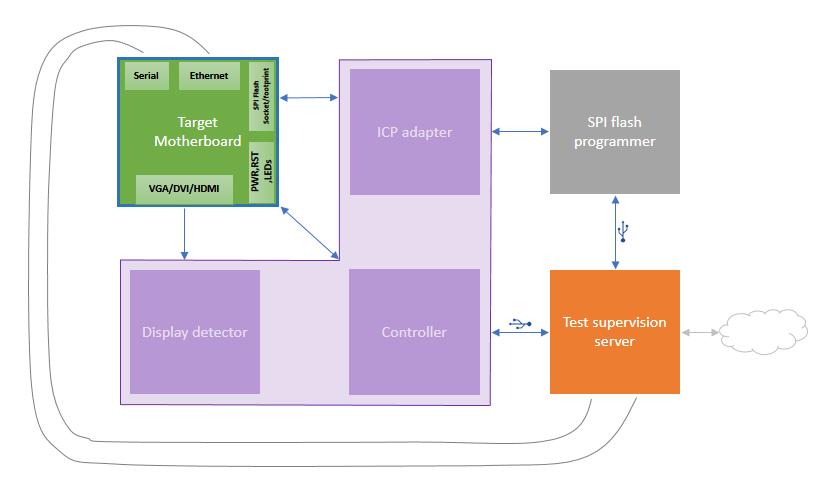

The figure above shows only one target board connected to the test supervision for simplicity. The purple L-block is the test-interface-board. This time, instead of using an integrated flasher, I wish to support all existing flashers, so I have developed an ICP adapter. An In-Circuit Programming (ICP) adapter contains the firmware flash memory removed from the motherboard, and it acts as an electronic change-over switch that connects this flash memory to the motherboard or the programmer as required. It also includes logic-level translation to support as many combinations of programmers and motherboards as possible. The supported programmer voltage range is 1.2V-5.5V, and the supported motherboard SPI voltage range is 1.65V-3.3V.

As a quick test for VGA initialization I’ve added video detection for analog video (VGA), DVI and HDMI ports. This checks for the presence of signals that are only there when video is active. In other words, it checks whether a monitor’s status LED would turn green if it were plugged into the motherboard, without actually plugging one in. I have prepared schematics, some parts of which are yet to be validated through prototyping. This will all be implemented as an Arduino add-on board (aka Shield) for convenience, and the Arduino will act as the controller.

The Serial and Ethernet ports can be connected in the usual way using Serial-to-USB cables, Ethernet cables and hubs.
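The change-over switch and its two voltage windows can be modelled in a few lines. This is only a sketch of the limits quoted above, expressed in millivolts; the enum, macro, and function names are invented here and do not come from the board's firmware.

```c
#include <assert.h>
#include <stdint.h>

/* Which side the ICP adapter currently connects the flash chip to. */
enum icp_route { ICP_TO_MOTHERBOARD, ICP_TO_PROGRAMMER };

/* Supported ranges from the description above, in millivolts. */
#define PROG_MIN_MV 1200u
#define PROG_MAX_MV 5500u
#define MB_MIN_MV   1650u
#define MB_MAX_MV   3300u

/* Returns 1 if the given I/O voltage is within the range for that side. */
static int icp_voltage_ok(enum icp_route route, uint32_t millivolts)
{
    switch (route) {
    case ICP_TO_PROGRAMMER:
        return millivolts >= PROG_MIN_MV && millivolts <= PROG_MAX_MV;
    case ICP_TO_MOTHERBOARD:
        return millivolts >= MB_MIN_MV && millivolts <= MB_MAX_MV;
    }
    assert(!"unknown route");
    return 0;
}
```

A 5 V programmer passes the check on the programmer side, while the same 5 V level would be rejected on the motherboard side, which is the situation the level translation exists to handle.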

VultureProg command center

It was time to upgrade. I got a new desk last week. For me, a piece of furniture is as boring as watching stainless steel rust (it does rust eventually). A desk, on the other hand, is anathema to replace. I have 20+ wires connected to my workstation going in all sorts of places. I like to keep these wires carefully routed and out of sight, a task made all the more difficult by the lack of any wire management gizmos on new desks. Consequently, after I get a new desk, you are well within your rights to imagine me grabbing my drilling machine, a set of saw cutter bits and an assortment of hole covers — and you need not wait long to see it. Needless to say, getting back up and running has taken a few days, but we are back with a shiny new VultureProg command center (pictured above for your viewing convenience).

The 80-20 rule

In 2011 I used to work developing Android apps. My boss told me “you will spend 20% of the time writing 80% of the code, and you will spend the remaining 80% of the time writing the remaining 20% of the code”. Sadly, QiProg has proven to not be an exception to this rule. Although I have consistently fixed issues and improved the layout of the code, the number of lines of code has been more or less stagnating after the first three weeks of exponential growth. I know how to erase the chip, I know how to program the chip, I know how to read the chip, and I have code to do all that. I just have not yet had the chance to hook it up to the ecosystem.

I am currently working on improving the bulk reading code. There are a few corner cases which are not well handled. As expected, it is taking a lot of time, and I even had to write a special testcase before I started.

Did you want fries with that?

Although we know how to handle every single aspect of the identify/read/erase/write/verify cycle, finding a way of connecting everything together in a simple, elegant, and efficient manner is a different story altogether. We have three API calls for handling erase and write, namely qiprog_set_erase_size, qiprog_set_erase_command, and qiprog_set_write_command. Peter wants everything to work without requiring an explicit erase. In his view, the VultureProg firmware should automatically erase a sector that is being written. Although this would simplify dumb cases where the whole chip or large sections of a chip are written at once, it spells disaster for flashrom’s aggressive optimization. What happens if the first part of a block matches contents, and flashrom decides to write the other part without needing an erase? VultureProg would erase the entire block, then write the second part to the block we never meant to erase. Questions such as this one need to be carefully thought over and elegantly answered. If I blindly start putting all the code together right now, I’ll most certainly have to fix it later.
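The hazard is easy to demonstrate with a toy flash model. In the sketch below, erasing sets every byte of a block to 0xFF and programming can only clear bits, which is how NOR flash generally behaves; the 16-byte block size and all function names are invented for the example and do not reflect the VultureProg firmware.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE 16u  /* tiny hypothetical erase block */

/* Erasing a flash block sets every byte to 0xFF. */
static void flash_erase_block(uint8_t *block)
{
    memset(block, 0xFF, BLOCK_SIZE);
}

/* Programming can only clear bits (1 -> 0), never set them. */
static void flash_program(uint8_t *block, uint32_t off,
                          const uint8_t *data, uint32_t n)
{
    assert(off <= BLOCK_SIZE && n <= BLOCK_SIZE - off);
    for (uint32_t i = 0; i < n; i++)
        block[off + i] &= data[i];
}

/* A write as the firmware might perform it, with optional implicit erase. */
static void prog_write(uint8_t *block, uint32_t off, const uint8_t *data,
                       uint32_t n, int implicit_erase)
{
    if (implicit_erase)
        flash_erase_block(block);  /* wipes the whole block, not just [off, off+n) */
    flash_program(block, off, data, n);
}
```

If the first half of a block already holds the desired contents and only the second half is written, the explicit-erase path leaves the first half alone, whereas the implicit-erase path returns it to 0xFF and silently destroys data the host never intended to touch.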

Postal patronage

I received a very interesting package last week. I had a deal with Idwer to relieve him of a few LPC and FWH flash chips. I was able to take them off his hands for just a few euros, a deal made sweeter by the fact that those chips are no longer being sold anywhere. I also sent Peter a VultureProg board. Since he already has a Stellaris or two, I have just recruited an eager tester.

Can you send me a board?

Short answer, no. Just grab the gerbers and take them to SeeedStudio. They will be able to get boards in your hands for much less than what I pay to ship them. The two SMD capacitors are not hard to assemble. If you still want me to send you an assembled PCB, I will charge $50 (international) or $30 (US). I don’t have the physical time to sit and assemble PCBs by hand, plus, it would be very un-geekish of you to not assemble your own.

GSoC [early debugging] AGESA woes

I took some days off the project for a holiday in mid-July; after that there has been a fair amount of problem solving and headaches with my main development platform (samsung/lumpy), as the upstream coreboot tree still appears to be broken. There is an incompatibility with a recent binary blob, and even after some local fixes I still lose some early debugging with usbdebug.

SAGE Electronic Engineering has kindly provided me with an AMD Persimmon board for coreboot development. It took a while for me to get it up and running, as it turned out that even the very basic documentation of the board connectors and jumpers was behind registration and login on the AMD website. The board arrived with a coreboot + SeaBIOS combination pre-installed. It seems the flashrom utility works, and I also have an SPI header connector for recovery purposes. So I should be fully equipped for my own build, and I hope I get lucky with usbdebug on this hardware.

Now I must say I am not a big fan of the AMD vendorcode named AGESA. Things like CBMEM init and CAR setup are done in a very different fashion compared to the implementation without vendorcode wrappers. It is heavy reading, and to get early logging via either CBMEM or usbdebug, I will need to master and possibly modify that code too.

On the SerialICE parts I have not made progress the way I had originally planned; meanwhile, some cleanups on low-level PCI configuration and minor usbdebug fixes have been merged. There is more to come on that front. I also have a fairly complex patchset draft on CBMEM; finalizing it should make it possible to enable the CBMEM console for all boards, for ramstage at least.